Sub-Millimeter Waveguides Are Reshaping AR Glasses: The Future of Lightweight, Immersive Wearables

The promise of augmented reality (AR) has captured the imagination of technologists and consumers alike for over a decade. With ambitions ranging from immersive entertainment and hands-free computing to industrial maintenance and real-time medical imaging, AR glasses are often heralded as the next evolution in personal technology—poised to succeed the smartphone as the dominant interface of the future. Yet despite immense capital investment, high-profile prototypes, and iterative product releases, widespread adoption of AR glasses remains elusive. The fundamental challenge? The optics.

At the heart of any AR system is the waveguide—an optical component responsible for projecting virtual images into the wearer’s field of view. Waveguides function by channeling light from micro-displays through a transparent substrate, enabling the illusion of computer-generated objects overlaying the real world. However, the conventional waveguide designs used in today’s AR glasses are inherently bulky, inefficient, and constrained by material limitations. They are frequently the reason AR glasses are still too thick, heavy, and power-hungry to be worn comfortably for extended periods. This "optical bottleneck" has become the primary hurdle impeding AR’s mainstream potential.

Recent breakthroughs in waveguide technology, particularly the development of sub-millimeter waveguides, are now positioned to overcome this critical obstacle. These ultrathin optical components—some measuring just fractions of a millimeter—promise dramatic reductions in weight and size while enhancing brightness, clarity, and energy efficiency. More importantly, sub-millimeter waveguides offer the potential to radically alter the industrial design of AR glasses, allowing manufacturers to build truly lightweight, sleek, and aesthetically viable devices that feel no more cumbersome than a regular pair of spectacles.

This technological advancement stems from interdisciplinary innovation across photonics, nanofabrication, and materials science. Engineers and researchers have begun to replace traditional diffractive optics with novel designs that rely on metasurfaces, photonic crystals, and nanostructured materials. These advances enable unprecedented control of light at sub-wavelength scales, compressing optical pathways into thinner, more efficient substrates. With early-stage prototypes demonstrating significant performance gains, the industry is inching closer to the holy grail of “all-day AR”—glasses so comfortable and unobtrusive they can be worn as naturally as prescription eyewear.

The implications of sub-millimeter waveguide technology are far-reaching. Beyond enabling thinner and lighter AR glasses, this development unlocks possibilities for improved battery efficiency, better integration of eye-tracking and sensor systems, and enhanced field-of-view (FOV) without image distortion or loss of clarity. In the context of consumer electronics, such a leap in optical design has the potential to redefine user expectations and catalyze a new phase in the wearable technology market.

This blog post explores the breakthrough in sub-millimeter waveguides and its implications for AR glasses. We begin with the fundamentals of waveguide optics in augmented reality and the limitations of traditional designs. Next, we examine the engineering innovations behind the new sub-millimeter waveguide approach, analyzing the materials, fabrication techniques, and performance metrics that distinguish it. We then explore how this technology is poised to transform the industrial design of AR glasses, improve wearability, and unlock more immersive user experiences.

In subsequent sections, we will look at how industry players are incorporating this innovation, including key prototypes, partnerships, and expected market impact. Finally, we will conclude by reflecting on the long-term vision enabled by this leap forward in optical design and what it means for the future of spatial computing.

As the race to deliver the next generation of AR glasses intensifies, the evolution of waveguide technology will be a decisive factor. While advances in processors, displays, and AI are critical, none can reach their full potential without a corresponding leap in optics. With the advent of sub-millimeter waveguides, that leap may finally be underway, ushering in a new era of wearable computing that is lighter, more immersive, and more seamlessly woven into daily life than ever before.

Understanding Waveguide Technology in Augmented Reality

The evolution of augmented reality (AR) devices has largely been driven by advances in computational hardware, microdisplays, and sensor integration. However, the optical systems that underpin AR glasses are often less visible yet equally—if not more—critical to the overall user experience. Among these, the waveguide serves as the foundational element in modern AR optics, enabling the delivery of computer-generated imagery into the human visual field. To understand the significance of the recent shift toward sub-millimeter waveguides, it is essential first to examine the traditional role of waveguides in AR and the limitations they impose.

At its core, a waveguide is a physical structure that guides light along a desired path. In the context of AR glasses, waveguides are used to transport light from a microdisplay (usually situated at the edge of the lens) across a transparent substrate to the user's eye. This process involves complex manipulation of light through reflection, refraction, and diffraction, allowing digital content to appear as if it is embedded within the real-world environment. The illusion of augmented visuals relies on the precise redirection and propagation of light beams so that they are perceived as coming from various distances and angles.

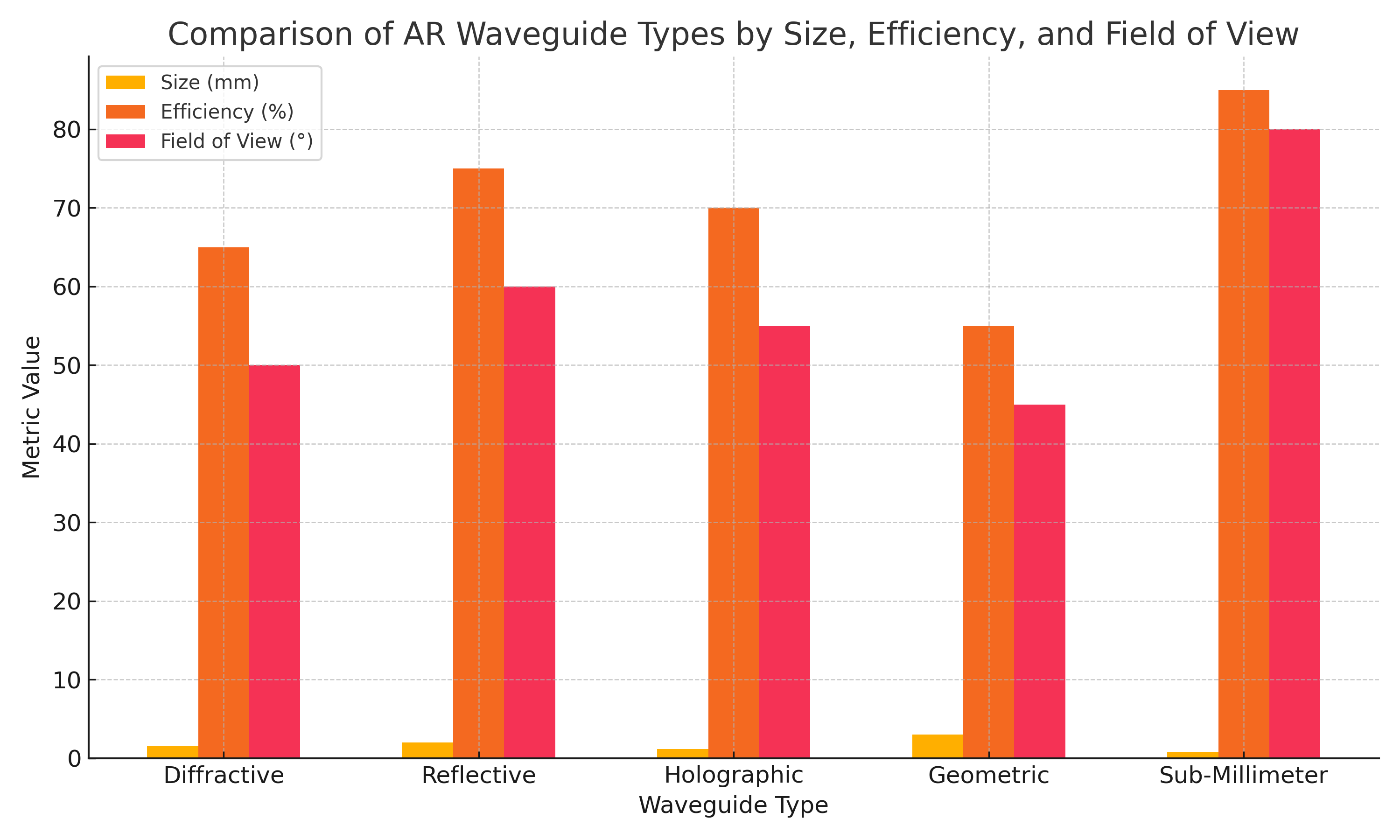

Waveguide technology has evolved over time, and today, several types are employed in AR systems. The most common categories include diffractive waveguides, reflective waveguides, holographic waveguides, and geometric waveguides, each with distinct physical principles and engineering trade-offs.

Diffractive Waveguides

Diffractive waveguides are among the most widely used in current AR glasses. These waveguides rely on input and output diffraction gratings embedded within a transparent medium to bend and propagate light. By exploiting the interference patterns of light, they allow compact integration and relatively high levels of image clarity. However, their major drawbacks include chromatic aberration, limited brightness under outdoor lighting conditions, and energy inefficiency due to light leakage.
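The chromatic behavior follows from the standard grating equation, which ties the diffracted angle to the wavelength. The sketch below is a minimal illustration, assuming a first-order in-coupling grating at normal incidence. The 380 nm pitch, the substrate index of 1.8, and the three sample wavelengths are illustrative values chosen here, not figures from any specific product.

```python
import math

def diffraction_angle_deg(wavelength_nm, pitch_nm, n_substrate,
                          incidence_deg=0.0, order=1):
    """Angle inside the substrate from the grating equation:
    n_substrate * sin(theta_d) = sin(theta_i) + order * wavelength / pitch.
    Returns None when the order is evanescent (no propagating solution)."""
    s = (math.sin(math.radians(incidence_deg))
         + order * wavelength_nm / pitch_nm) / n_substrate
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))

PITCH_NM = 380.0     # assumed grating pitch
N_SUBSTRATE = 1.8    # assumed substrate refractive index

angles = {name: diffraction_angle_deg(wl, PITCH_NM, N_SUBSTRATE)
          for name, wl in [("blue", 460.0), ("green", 530.0), ("red", 620.0)]}
for name, angle in angles.items():
    print(f"{name}: {angle:.1f} deg inside the substrate")
```

Under these assumptions, red light travels at a noticeably steeper angle than blue, so the colors bounce along the substrate at different spacings. That spread is one source of the color non-uniformity that diffractive designs have to correct for.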

Reflective Waveguides

Reflective waveguides utilize mirrors or prisms to guide light within the optical substrate. While this method offers excellent color fidelity and reduced image distortion, it tends to require more space and results in bulkier lenses. The complexity of incorporating multiple mirrors also makes it harder to miniaturize such systems for lightweight wearable devices.

Holographic Waveguides

Holographic waveguides form another class, in which holographic optical elements (HOEs), essentially recorded holograms, serve as the input and output couplers. They provide a thinner profile than reflective systems and can be tuned to specific wavelengths for improved efficiency. However, they are sensitive to ambient lighting and often suffer from reduced contrast and display uniformity.

Geometric Waveguides

Geometric waveguides, often used in early smart glasses, rely on conventional optics such as prisms and lenses to project images directly into the user’s eye. While effective in prototype systems, their inherent size and weight make them impractical for consumer-grade AR wearables.

Despite the diversity in approach, all these traditional waveguide technologies share a common constraint: physical thickness. To adequately route and expand light, waveguides require a certain volume of material and space, which limits the design flexibility and miniaturization potential of AR glasses. The result is a trade-off between optical performance and wearability—heavier glasses with limited field-of-view (FOV), narrow eye-boxes (the optimal viewing region), and power inefficiencies.

This problem is compounded when attempting to scale up for mass production. As AR glasses aim for mainstream adoption, manufacturers must produce lenses that are not only optically accurate but also manufacturable at scale, durable, and suitable for all-day use. Traditional waveguide fabrication methods—such as precision grinding, optical bonding, and laser etching—can be cost-intensive and difficult to miniaturize further without degrading image quality.

Moreover, existing waveguides often limit the field of view, a crucial metric that defines how immersive an AR experience can be. Most commercial AR glasses today offer FOVs of around 40°–50°, which feels narrow compared to the roughly 120° of binocular overlap in natural human vision (the full horizontal field, including peripheral vision, is wider still). Expanding this view typically increases the size and thickness of the waveguide, creating a paradox: better visuals require more space, yet the demand is for sleeker, lighter eyewear.

It is against this backdrop of optical compromise that sub-millimeter waveguides emerge as a potentially transformative solution. These next-generation optical elements promise to resolve long-standing constraints by reducing waveguide thickness to below 1 millimeter, without sacrificing key visual parameters such as brightness, resolution, and FOV. By leveraging advanced nanophotonics and new materials science approaches, sub-millimeter waveguides can confine and steer light more precisely, opening the door to revolutionary changes in both design and performance.

Sub-millimeter waveguides represent a departure from traditional macro-scale optics, utilizing metasurfaces and nano-patterned structures to manipulate light at scales smaller than the wavelength itself. This allows for flat optical components that function as lenses, mirrors, and beam splitters simultaneously, with tunable optical properties engineered at the sub-wavelength level. These advancements not only reduce the physical footprint of AR optics but also enhance the control over light paths, polarization, and spectral behavior—elements that are critical to achieving high-fidelity AR experiences.

In summary, waveguides are the unsung heroes of AR glasses, serving as the gateway through which virtual content is brought into the user’s world. The current generation of waveguides, while functional, imposes hard limits on device design, usability, and visual immersion. As the AR industry matures, the move toward sub-millimeter waveguides signals a foundational shift—one that challenges the traditional assumptions of optical design and reimagines what is possible in wearable augmented reality.

The Breakthrough: Engineering the Sub-Millimeter Waveguide

The advent of sub-millimeter waveguide technology represents a pivotal milestone in the development of augmented reality (AR) eyewear. Historically, the design of AR glasses has been constrained by the limitations of optical components—particularly the waveguides responsible for image delivery. Traditional waveguides, while functional, imposed significant trade-offs in terms of size, power efficiency, and image quality. Recent engineering breakthroughs, however, have enabled the creation of waveguides measuring less than one millimeter in thickness, achieving superior optical performance while radically transforming the form factor of AR devices.

This breakthrough stems from multidisciplinary innovation at the intersection of nanophotonics, advanced materials, and precision fabrication. The transition from millimeter-scale to sub-millimeter waveguides has not been a matter of simple scaling but rather a fundamental rethinking of how light can be manipulated at ultra-small dimensions. At the core of this innovation lies the development of metasurfaces, photonic crystals, and nano-patterned optical elements—engineered surfaces that provide unprecedented control over the phase, amplitude, and polarization of light.

Metasurfaces: Rewriting Optical Physics

A key enabler of sub-millimeter waveguide performance is the implementation of metasurfaces—planar structures composed of sub-wavelength-scale elements that behave as optical antennas. Unlike traditional optics that rely on refraction or reflection through bulk materials, metasurfaces manipulate electromagnetic waves via localized interactions. Each nanostructure, or "meta-atom," is designed to impart a specific phase shift, enabling the wavefront of light to be shaped precisely.
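To make the idea of per-element phase shifts concrete, the sketch below computes the phase each meta-atom would need in order to steer a normally incident beam by a fixed angle, using the linear phase gradient from the generalized law of refraction. The function name, the 250 nm element spacing, the 530 nm wavelength, and the 30° target are all assumptions picked for illustration. Real designs also have to handle multiple wavelengths and incidence angles.

```python
import math

def meta_atom_phases(n_atoms, atom_pitch_nm, wavelength_nm, deflect_deg, n_out=1.0):
    """Phase (radians, wrapped to [0, 2*pi)) that each meta-atom must impart to
    steer a normally incident beam to deflect_deg in a medium of index n_out.
    Uses the linear gradient d(phi)/dx = 2*pi * n_out * sin(theta) / wavelength."""
    gradient = (2.0 * math.pi * n_out
                * math.sin(math.radians(deflect_deg)) / wavelength_nm)
    return [(gradient * i * atom_pitch_nm) % (2.0 * math.pi)
            for i in range(n_atoms)]

# Hypothetical design point: sub-wavelength spacing (250 nm) for green light.
phases = meta_atom_phases(n_atoms=8, atom_pitch_nm=250.0,
                          wavelength_nm=530.0, deflect_deg=30.0)
print([round(math.degrees(p)) for p in phases])
```

Each entry is the phase delay one nanostructure is designed to add. In practice a designer would map these target phases to pillar widths or orientations from a precomputed library, a step this sketch leaves out.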

In the context of waveguides, metasurfaces serve as input and output couplers that direct light into and out of a substrate with extreme precision. Because they operate at scales smaller than the wavelength of visible light, these surfaces eliminate the need for bulky prisms or gratings. As a result, waveguides built with metasurface couplers can achieve ultra-thin profiles while maintaining high optical throughput and minimal signal distortion.

Furthermore, metasurface-based waveguides can be designed for broadband operation, supporting full-color displays with far less of the chromatic aberration that plagues diffractive systems. The tunability of nanostructure geometries also allows for polarization-insensitive operation, thereby enhancing eye-box stability and reducing image flicker, two critical factors for all-day wearability in AR devices.

Photonic Crystals and Nano-Patterning

Another critical component of sub-millimeter waveguide engineering involves the integration of photonic crystals—materials structured on the scale of optical wavelengths to create photonic bandgaps. These structures control the propagation of light through periodic refractive index variations, enabling highly confined light paths within a thin substrate. By adjusting the lattice geometry, photonic crystal waveguides can support directional control of light with minimal loss, enabling compact and high-efficiency AR displays.

When combined with nano-patterning techniques, such as electron-beam lithography or nanoimprint lithography, manufacturers can fabricate intricate optical elements with nanoscale resolution. These patterns are embedded within or atop transparent substrates (e.g., glass or polymer layers) to define light propagation paths with remarkable accuracy. This level of precision greatly reduces optical leakage and ghosting, which are common drawbacks of traditional systems.

In some implementations, holographically recorded nanostructures are also employed to create volume holographic waveguides with enhanced spectral selectivity. These hybrid systems combine the strengths of holography and nanophotonics, resulting in waveguides that offer high image fidelity and reduced ambient light sensitivity.

Material Advancements: High-Index and Flexible Substrates

The success of sub-millimeter waveguides is also closely linked to innovations in optical materials. Traditional waveguides rely on glass or plastic substrates with modest refractive indices, which limit the degree of light confinement. By contrast, modern designs incorporate high-refractive-index materials, such as titanium dioxide (TiO₂), gallium nitride (GaN), or custom-engineered polymers. These materials enable tighter light confinement, allowing for thinner optical pathways without compromising image quality.
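The link between refractive index and light confinement can be seen from the critical angle for total internal reflection, which follows from Snell's law. The sketch below is a minimal illustration. The index values are rough, assumed figures (the 2.4 used for TiO₂ in particular varies with crystal phase and wavelength), and air is assumed on the outside of the substrate.

```python
import math

def critical_angle_deg(n_substrate, n_outside=1.0):
    """Smallest internal angle (measured from the surface normal) that is
    totally internally reflected: sin(theta_c) = n_outside / n_substrate."""
    return math.degrees(math.asin(n_outside / n_substrate))

# Assumed, approximate refractive indices for illustration only.
for label, n in [("standard glass", 1.5),
                 ("high-index glass", 1.9),
                 ("TiO2 (approx.)", 2.4)]:
    theta_c = critical_angle_deg(n)
    print(f"{label}: n={n}, critical angle {theta_c:.1f} deg, "
          f"guided range {90.0 - theta_c:.1f} deg")
```

A higher index lowers the critical angle, so a wider cone of ray angles stays trapped in the substrate. That extra angular range is what lets a thinner slab carry the same image.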

In addition, the emergence of flexible and formable substrates opens new possibilities for curved waveguides that conform to natural lens shapes. This capability supports not only thinner lenses but also more ergonomic and stylish AR glasses that resemble conventional eyewear. The ability to curve waveguides without inducing optical distortion is particularly valuable in developing wraparound displays or seamless lens integration.

Advanced Manufacturing Techniques

Manufacturing sub-millimeter waveguides at scale has long been a challenge, but recent developments in production techniques have brought the technology closer to commercialization. Among the most promising methods is nanoimprint lithography (NIL)—a high-throughput process that stamps nanoscale patterns onto substrates with excellent fidelity. NIL combines low-cost replication with high precision, making it suitable for mass production of complex optical elements.

Other fabrication methods include laser direct writing, which enables customizable waveguide architectures with minimal tooling, and roll-to-roll processing, which facilitates continuous production on flexible substrates. These techniques are particularly attractive for consumer electronics manufacturers seeking to reduce costs while maintaining product differentiation.

Moreover, hybrid approaches that combine additive and subtractive nanofabrication allow for complex multi-layered designs, enabling advanced waveguide configurations such as stackable or multiplexed channels for multi-depth or wide-field AR experiences.

Performance Metrics and Technical Benefits

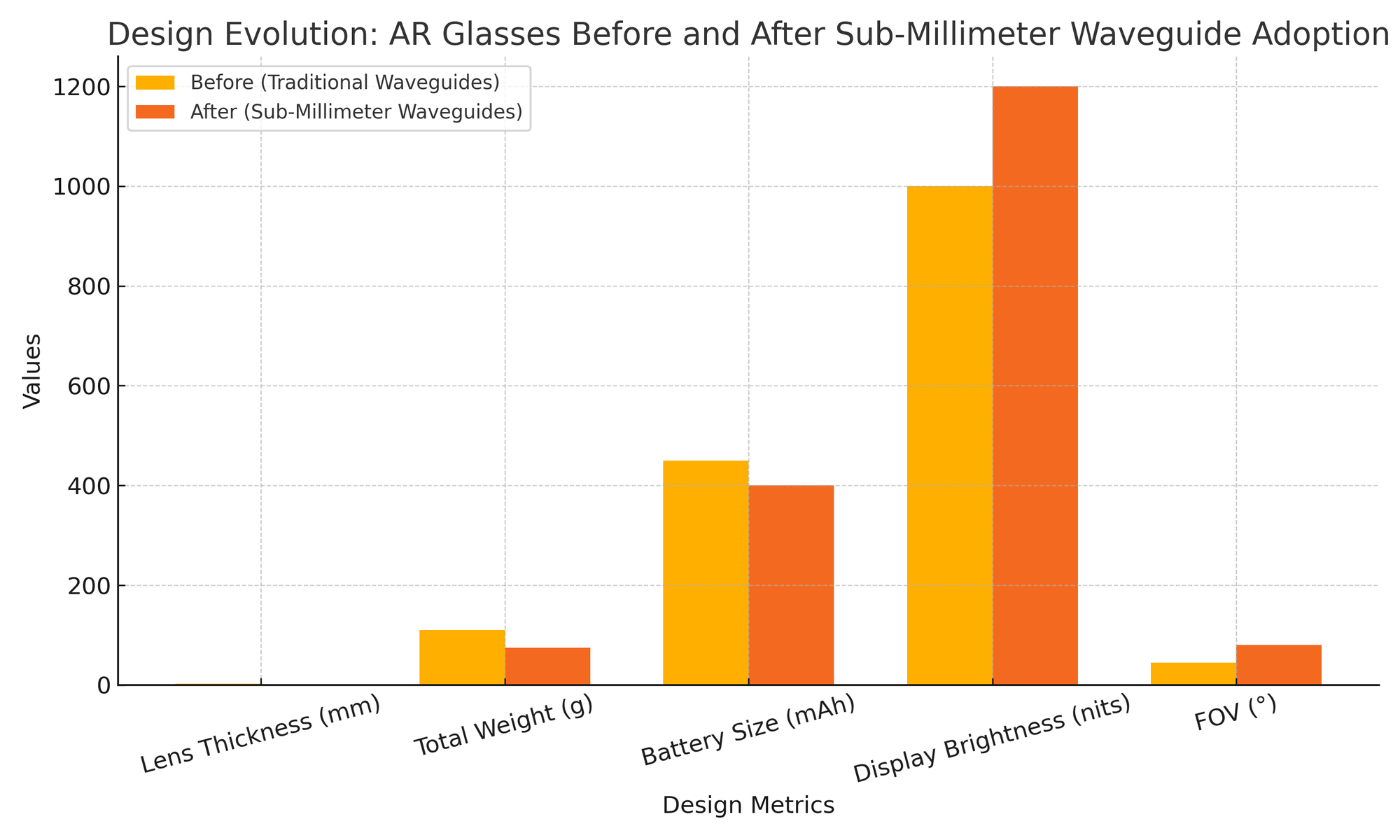

The shift to sub-millimeter waveguides yields quantifiable improvements across several key performance metrics. In terms of thickness, new waveguide designs measure as little as 0.5–0.8 mm—less than half the thickness of most diffractive systems. This reduction directly translates to lighter and slimmer AR glasses, which improves user comfort and expands the range of use cases.

Sub-millimeter waveguides also demonstrate higher transmission efficiency, often reaching 80–85%, compared to 60–70% in traditional systems. This improvement reduces power consumption by lowering the brightness required from the microdisplay, which is critical for battery-powered wearable devices.
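As a rough back-of-the-envelope check on what these efficiency figures mean for the display, the sketch below assumes that brightness at the eye scales linearly with waveguide efficiency, which is a simplification. The 1000-nit target and the use of range midpoints are assumptions made here for illustration.

```python
def required_display_luminance(target_eye_nits, waveguide_efficiency):
    """Display output needed to deliver target_eye_nits through a waveguide,
    assuming eye luminance scales linearly with efficiency (a simplification)."""
    if not 0.0 < waveguide_efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return target_eye_nits / waveguide_efficiency

TARGET_NITS = 1000.0  # hypothetical brightness target at the eye

legacy = required_display_luminance(TARGET_NITS, 0.65)   # midpoint of 60-70%
sub_mm = required_display_luminance(TARGET_NITS, 0.825)  # midpoint of 80-85%
saving = 1.0 - sub_mm / legacy

print(f"legacy: {legacy:.0f} nits, sub-mm: {sub_mm:.0f} nits, "
      f"reduction: {saving:.0%}")
```

With these midpoints the display needs to emit about 21% less light for the same perceived brightness. How much of that turns into battery savings depends on how display power scales with luminance, which this sketch does not model.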

In addition, these waveguides support larger fields of view, with some prototypes achieving 70°–80°, compared to the 40°–50° range typical of current consumer-grade AR glasses. A wider FOV increases the sense of immersion and spatial realism, making digital overlays more compelling and contextually integrated.
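To get a feel for what the extra degrees mean, the sketch below converts a field of view into the width of virtual content it can span at a given distance. It treats the quoted FOV as a horizontal angle and uses a 2 m viewing distance. Both are assumptions made for illustration, since vendors often quote diagonal FOV.

```python
import math

def virtual_image_width_m(fov_deg, distance_m):
    """Width spanned by a horizontal field of view at a given viewing distance:
    width = 2 * distance * tan(fov / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

DISTANCE_M = 2.0  # illustrative virtual image distance

for fov in (45.0, 75.0):
    width = virtual_image_width_m(fov, DISTANCE_M)
    print(f"{fov:.0f} deg FOV covers {width:.2f} m at {DISTANCE_M} m")
```

Because the width grows with the tangent of the half-angle, going from 45° to 75° nearly doubles the usable width at that distance rather than adding a proportional two-thirds.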

Lastly, visual uniformity and eye-box stability are enhanced, reducing visual artifacts, motion sickness, and the need for constant eye alignment. These factors contribute to a more seamless and comfortable user experience, essential for long-duration AR use in professional or daily-life scenarios.

The engineering of sub-millimeter waveguides marks a fundamental departure from traditional optical paradigms. Through the integration of metasurfaces, nanophotonic structures, advanced materials, and scalable manufacturing techniques, this innovation addresses long-standing barriers in AR design. The result is a new class of ultra-thin, high-performance optical components that not only enhance display quality but also unlock a new era of ergonomic and aesthetically viable AR glasses.

Implications for AR Glasses Design and Wearability

The evolution of waveguide technology from millimeter-scale components to sub-millimeter precision optics is not merely a technical refinement—it is a profound enabler of design transformation in augmented reality (AR) glasses. While advances in microdisplays, batteries, and edge computing have steadily progressed, it is the miniaturization of optical waveguides that promises to unlock the long-awaited potential of AR as a truly wearable, all-day computing platform. This section examines how sub-millimeter waveguides are reshaping the industrial design, ergonomics, and integration strategies of AR glasses, with significant implications for user adoption, aesthetic viability, and functional versatility.

Industrial Design Revolution: Toward Sleek, Minimalist Profiles

One of the most immediate and visible impacts of sub-millimeter waveguides is the dramatic reduction in the physical size and weight of AR glasses. Conventional waveguides typically measure between 1.5 and 3 millimeters in thickness, contributing significantly to the bulkiness of the lens assembly. By contrast, sub-millimeter waveguides can be manufactured at thicknesses below 0.8 millimeters, allowing for substantially thinner optical stacks without compromising image clarity or field of view.
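The weight impact of these thickness figures can be estimated by treating each waveguide as a flat slab. The sketch below is only a rough illustration. The 15 cm² lens area and the 2.5 g/cm³ density (roughly that of ordinary glass) are assumed values, and high-index materials are typically denser.

```python
def lens_mass_g(area_cm2, thickness_mm, density_g_cm3):
    """Mass of a flat slab: area * thickness * density (thickness converted to cm)."""
    return area_cm2 * (thickness_mm / 10.0) * density_g_cm3

LENS_AREA_CM2 = 15.0   # assumed area of one lens
DENSITY_G_CM3 = 2.5    # assumed density, roughly ordinary glass

for thickness_mm in (3.0, 1.5, 0.8):
    pair_mass = 2 * lens_mass_g(LENS_AREA_CM2, thickness_mm, DENSITY_G_CM3)
    print(f"{thickness_mm} mm waveguide: about {pair_mass:.1f} g per pair")
```

Under these assumptions, moving from a 3 mm to a 0.8 mm waveguide takes a pair of lenses from roughly 22 g to 6 g, a meaningful share of the total weight for glasses meant to be worn all day.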

This reduction enables AR glasses to resemble conventional eyewear more closely—a crucial factor in achieving mainstream consumer adoption. Bulky, tech-heavy designs have long been a psychological and aesthetic barrier to AR uptake, particularly in non-enterprise environments. Sub-millimeter waveguides facilitate the creation of lightweight, stylish frames that can be worn discreetly in public or professional settings without drawing unwanted attention or causing user discomfort.

In addition, the slimmer profile allows for the distribution of internal components—such as batteries, sensors, and processors—across the glasses’ temples and bridge with greater design flexibility. This not only aids in thermal management and weight balance but also supports modularity, which is valuable for customizing glasses to specific user needs or environments.

Ergonomics and Comfort: Enabling All-Day Wear

Comfort is a critical determinant of wearability, and sub-millimeter waveguides offer significant advantages in this regard. Thinner lenses reduce the overall weight of the glasses, alleviating pressure points around the nose and ears. For context, every gram matters when it comes to prolonged use—most users will reject a device that feels heavy or intrusive after even a short period.

The use of sub-millimeter optics also improves the weight distribution across the frame. Traditional designs often concentrate weight around the lens housing, leading to front-heavy configurations that cause fatigue and slippage. With compact optical modules, designers can reposition the weight to achieve better equilibrium, resulting in glasses that feel more natural and require fewer adjustments throughout the day.

Moreover, the refined optics contribute to enhanced visual comfort. Sub-millimeter waveguides provide improved brightness uniformity, reduced optical aberrations, and wider eye-boxes—meaning users no longer need to hold their heads in a fixed position to maintain image alignment. This feature is especially beneficial for users who require dynamic movement or multitasking throughout the day, such as warehouse workers, field technicians, or medical professionals.

The reduction in motion-induced visual artifacts also contributes to a decrease in visual fatigue and motion sickness, common complaints associated with earlier-generation AR devices. By providing stable, clear imagery that moves seamlessly with the user’s field of vision, sub-millimeter waveguides support a more organic interaction between digital overlays and the real world.

Battery Efficiency and Power Management

Sub-millimeter waveguides also contribute indirectly to improved battery life, a perennial challenge in wearable technology. Due to their higher light transmission efficiency (often 80–85%, compared to the 60–70% range typical of older systems), these waveguides require less power from the microdisplay to achieve the same level of brightness. Lower display power draw allows manufacturers to either reduce battery size (further reducing weight) or extend usage time without increasing form factor.

This energy efficiency has cascading benefits across the system architecture. Lower power draw means less heat generation, which reduces the need for active cooling solutions. Passive thermal management becomes feasible, enabling sealed, waterproof enclosures and better long-term durability. Additionally, the power saved can be reallocated to support advanced features such as real-time object recognition, edge-based AI computation, or sensor fusion, without sacrificing runtime.

Seamless Integration of Sensors and Modules

The miniaturization of the optical stack enables better integration of supplementary hardware components, which are essential for delivering intelligent, context-aware AR experiences. These include eye-tracking modules, ambient light sensors, depth cameras, and microphones. In traditional AR glasses, finding space for such components without adding bulk or interfering with optical pathways has been a major design challenge.

Sub-millimeter waveguides free up valuable real estate in the lens assembly and frames, enabling tighter packaging and alignment of multiple subsystems. For example, eye-tracking cameras can now be embedded closer to the lens surface without obstructing the user’s view or increasing device thickness. Similarly, infrared projectors used for spatial mapping can be integrated directly within the temple arms, contributing to a more seamless form factor.

This enhanced integration capability is especially valuable in professional applications, where AR glasses must meet strict performance, safety, and hygiene standards. In surgical environments, for instance, the ability to embed high-precision sensors within a slim, easy-to-clean frame is critical. Likewise, in industrial maintenance, ruggedness and comfort must be balanced with high-resolution environmental awareness.

Aesthetic Viability and Consumer Acceptance

Beyond technical and ergonomic considerations, sub-millimeter waveguides have a profound impact on the social acceptability of AR wearables. For AR glasses to become mainstream, they must transcend their identity as "tech gadgets" and enter the realm of fashion and lifestyle accessories. Consumers are unlikely to adopt devices that appear overly technical, awkward, or conspicuously futuristic.

By enabling thinner, more elegant designs, sub-millimeter waveguides allow AR glasses to match the aesthetic expectations of contemporary eyewear. Designers can create frames that are not only functional but also fashionable, appealing to style-conscious users who demand personalization and visual harmony. This design freedom is particularly important in markets such as luxury retail, health and wellness, and education, where appearance plays a central role in product acceptance.

Some manufacturers are already collaborating with fashion houses and eyewear brands to explore co-branded AR devices that combine high-performance optics with design excellence. The convergence of advanced engineering and consumer aesthetics will be a defining factor in the long-term success of AR glasses.

In summary, the implications of sub-millimeter waveguide technology extend far beyond the optics lab. By enabling lighter, slimmer, and more comfortable AR glasses, these next-generation components address long-standing design and usability challenges that have hindered adoption. The result is a new class of devices that not only perform better but also feel, look, and behave like everyday eyewear—bringing the vision of ubiquitous AR one step closer to reality.

Industry Adoption, Key Players, and Prototypes

As sub-millimeter waveguide technology progresses from laboratory research to real-world deployment, its adoption by key industry players signals a new chapter in augmented reality (AR) innovation. While the underlying science is groundbreaking, the true impact of sub-millimeter waveguides will be measured by their integration into commercially viable AR devices. This section explores how leading companies, startups, and research institutions are embracing this optical advancement, what prototypes and product roadmaps are emerging, and how this shift is reshaping the competitive landscape.

Technology Commercialization and Industry Momentum

The transition from traditional to sub-millimeter waveguides represents not just a technological upgrade but also a reorientation of product design, manufacturing strategy, and user engagement. AR industry leaders have recognized that optical systems are no longer peripheral—they are core differentiators. As a result, the pursuit of sub-millimeter waveguides has become a strategic priority for major players seeking to dominate the next generation of wearable computing.

The momentum behind commercialization is driven by growing demand across multiple sectors: consumer electronics, enterprise solutions, defense, healthcare, and industrial automation. Each of these domains imposes unique requirements—ranging from durability and performance to comfort and discretion—all of which benefit from the enhanced properties of sub-millimeter optical components.

Leading Corporations Investing in Sub-Millimeter Waveguides

Several prominent technology firms have made visible moves toward adopting or developing sub-millimeter waveguide technology, often through partnerships, acquisitions, or in-house research initiatives.

Meta

Meta’s investment in AR through its Reality Labs division is well documented. The company has filed numerous patents related to compact optical engines, nanostructured waveguides, and holographic elements. In 2023, Meta researchers published papers detailing metasurface-based optical combiners that significantly reduce lens thickness. Meta’s long-term vision of lightweight, socially acceptable AR glasses—eventually replacing smartphones—hinges on achieving breakthroughs in optical miniaturization, with sub-millimeter waveguides playing a central role.

Google

Following the relaunch of Google Glass as an enterprise product, Google has shifted focus toward immersive wearable platforms. The company’s acquisition of North, a startup known for its lightweight smart glasses with custom-built optics, points to a commitment to advanced waveguide design. Google has also collaborated with academic institutions on photonic crystal integration, signaling its interest in scalable sub-millimeter solutions.

Apple

Although Apple remains secretive about its AR roadmap, several job postings and patent filings suggest the company is developing next-generation optics for its rumored AR headset and smart glasses. Apple’s historical emphasis on thinness, elegance, and user experience makes sub-millimeter waveguides an ideal fit. Reports have surfaced suggesting Apple is investing heavily in nanoimprint lithography capabilities, which are key to producing ultra-thin optical components.

Microsoft

As the creator of the HoloLens, Microsoft was among the earliest companies to integrate waveguide optics into a commercial AR headset. While current models use diffractive waveguides, Microsoft Research has published exploratory work on metasurface arrays and compact display engines. Strategic partnerships with military and enterprise clients make it likely that future HoloLens iterations will integrate thinner, lighter optical systems to improve wearability and operational longevity.

Startups and Innovators

While tech giants dominate headlines, a vibrant ecosystem of startups and research spin-offs is driving innovation in sub-millimeter waveguide technology.

Lumus

An Israeli optics company, Lumus has pioneered reflective waveguide technology and recently announced next-gen prototypes with reduced thickness and wider fields of view. The company claims its new optical engine, based on partially reflective mirrors and geometric waveguide folding, offers performance comparable to larger systems at half the thickness.

WaveOptics

WaveOptics is known for developing diffractive waveguides for AR wearables. Since its acquisition by Snap, the company has pushed toward more compact, scalable designs using hybrid fabrication methods. Rumors suggest WaveOptics is testing new sub-wavelength grating technologies for integration into Snap’s Spectacles lineup.

Dispelix

A Finnish company specializing in waveguide displays, Dispelix focuses on delivering full-color, high-efficiency waveguides for consumer-grade AR glasses. Recent press releases indicate their move toward metasurface and holographic element integration, aimed at reducing total optical stack thickness to under one millimeter.

Magic Leap

Having refocused its strategy toward enterprise markets, Magic Leap has emphasized optics as a key differentiator. The Magic Leap 2 features a more compact optical engine than its predecessor, and the company is reportedly exploring metasurface couplers and high-index materials for its future roadmap.

Research Institutions and Collaborations

Academic and government-funded institutions have also played a pivotal role in developing sub-millimeter waveguides. MIT’s Media Lab, Stanford’s Nano Shared Facilities, and the University of Central Florida’s CREOL center are actively advancing metasurface engineering and nanophotonic fabrication. These institutions often collaborate with industry via joint research agreements, bridging the gap between theory and application.

Government programs, including DARPA’s Modular Optical Aperture Systems (MOAS) initiative and the European Union’s Horizon framework, have allocated funding for sub-wavelength optics research, with several pilot programs focused on defense-grade AR systems using ultra-thin waveguides.

Prototypes and Product Roadmaps

Multiple companies have unveiled early prototypes that highlight the potential of sub-millimeter waveguide integration.

- Meta’s AR Glasses Concept (2024): Featured ultra-thin lenses with embedded nanophotonic elements; reportedly weighed under 70 g with a field of view above 75°.

- Apple Smart Glasses Prototype (leaked): Claimed use of vertically stacked metasurface layers achieving sub-mm thickness and integrated eye-tracking.

- Snap Spectacles Developer Edition: Experimental model with reduced lens depth and improved clarity, suggesting ongoing tests of hybrid waveguides.

- Lumus Z-Lens: Publicly demonstrated a 0.8 mm waveguide prototype with bright, full-color output and a wide eyebox, targeting a 2025 commercial release.

Although most of these products remain in development or limited release, and some details rest on leaks rather than official announcements, together they indicate that sub-millimeter waveguides are moving beyond speculation and are already shaping product decisions and competitive differentiation in the AR market.

The adoption of sub-millimeter waveguide technology is catalyzing a new wave of innovation across the AR ecosystem. From global tech giants to agile startups and academic labs, stakeholders are aligning around the promise of ultra-thin, high-efficiency optics as a foundation for the next generation of AR devices. The convergence of scalable manufacturing, material science breakthroughs, and cross-sector demand suggests that sub-millimeter waveguides are not a fleeting trend but a structural evolution in spatial computing.

Future Outlook: Toward Seamless, Everyday AR Integration

The introduction of sub-millimeter waveguide technology marks more than just an optical refinement—it signifies a fundamental shift in the trajectory of augmented reality (AR) integration into daily life. As AR glasses transition from niche enterprise tools to mainstream consumer devices, the vision of ubiquitous, context-aware computing increasingly depends on advances that merge utility with comfort, and performance with discretion. Sub-millimeter waveguides provide the essential infrastructure for this vision, enabling form factors that are socially acceptable, ergonomically sustainable, and technologically sophisticated.

This final section considers the long-term implications of sub-millimeter waveguides for consumer adoption, hardware evolution, integration with AI and other digital layers, and the broader redefinition of human-computer interaction.

Redefining the Wearable Computing Paradigm

The paradigm of wearable computing has long been constrained by the need to balance functionality with user comfort. Early smart glasses, often criticized for their bulk, awkward appearance, and limited field of view, failed to resonate with everyday users. Sub-millimeter waveguides address many of these longstanding issues, supporting the development of AR glasses that are lightweight, slim, and close to indistinguishable from standard eyewear.

In the near term, this shift will redefine what consumers expect from AR hardware. Just as smartphones evolved from bulky PDAs to sleek, pocket-sized devices that users rely on daily, AR glasses will increasingly adopt a minimalist aesthetic while becoming functionally indispensable. Whether used for turn-by-turn navigation, real-time translation, or ambient notifications, AR glasses enhanced by sub-millimeter optics will become less of a technological novelty and more of a natural interface for digital engagement.

Enhancing Multimodal Interfaces with AI

The fusion of ultra-thin optical systems with edge-based artificial intelligence presents one of the most compelling future pathways for AR. As waveguides shrink and displays become less intrusive, the role of voice, gesture, gaze, and contextual awareness will expand. Sub-millimeter waveguides, by minimizing the hardware footprint and optical distortions, enable seamless integration with eye-tracking cameras, neural interfaces, and AI inference engines located on-device or in the cloud.

This convergence allows for dynamic adaptation of visual content. For instance, a user’s gaze could trigger contextual information overlays such as pricing data, background on a landmark, or real-time sentiment analysis during a conversation. Eye tracking combined with AI also supports foveated rendering, in which full-resolution imagery is rendered only in the region the eye is fixating while the periphery is drawn at lower resolution, conserving processing power and battery life.
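To make the foveated-rendering idea concrete, the short Python sketch below estimates how much shading work is saved when resolution falls off with angular distance from the gaze point. It is an illustration only, not any vendor's rendering pipeline: the function names, the 5° and 20° region boundaries, the scale factors, and the 40° by 30° field of view are all assumptions chosen for readability.

```python
import math

# All thresholds and scale factors below are illustrative assumptions,
# not values taken from any shipping AR device.
FOVEA_DEG = 5.0   # assumed radius of the full-resolution region
MID_DEG = 20.0    # assumed outer edge of the medium-resolution ring


def eccentricity_deg(gaze, tile):
    """Angular distance in degrees between the gaze point and a tile center.

    Both arguments are (horizontal, vertical) angles in degrees. A flat
    Euclidean distance is used as a small-angle approximation.
    """
    return math.hypot(tile[0] - gaze[0], tile[1] - gaze[1])


def resolution_scale(ecc_deg):
    """Per-axis fraction of full resolution used at a given eccentricity."""
    if ecc_deg <= FOVEA_DEG:
        return 1.0
    if ecc_deg <= MID_DEG:
        return 0.5
    return 0.25


def shaded_pixel_fraction(gaze, tiles):
    """Average fraction of pixels shaded over equal-sized tiles.

    Pixel count scales with the square of the per-axis resolution scale.
    """
    scales = [resolution_scale(eccentricity_deg(gaze, t)) for t in tiles]
    return sum(s * s for s in scales) / len(scales)


if __name__ == "__main__":
    # Hypothetical 40 x 30 degree field of view split into 5-degree tiles.
    tiles = [(x + 2.5, y + 2.5)
             for x in range(-20, 20, 5)
             for y in range(-15, 15, 5)]
    frac = shaded_pixel_fraction((0.0, 0.0), tiles)
    print(f"Pixels shaded relative to full resolution: {frac:.0%}")
```

Moving the gaze tuple passed to `shaded_pixel_fraction` shows how the workload shifts as the eye moves; a production system would also need to account for eye-tracker latency and smooth the transitions between regions.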

Moreover, as wearable AI agents become more capable of interpreting user intent, sub-millimeter waveguides will serve as the optical substrate through which machine intelligence is conveyed in real-time. Imagine a scenario in which a digital assistant silently projects discreet visual prompts in a user’s peripheral vision—reminders, alerts, or suggestions—without interrupting the primary visual experience. This style of ambient AR aligns with the broader movement toward more intuitive and less intrusive digital systems.

Expanding Applications Across Industries

The miniaturization of AR optical systems opens vast opportunities across industrial, medical, defense, and consumer domains. In industrial settings, lightweight AR glasses can provide engineers and technicians with real-time diagnostics, assembly instructions, or quality assurance feedback without requiring them to divert attention to separate displays. Because sub-millimeter waveguides can support high-brightness displays in compact form, such glasses are suitable for both indoor and outdoor environments.
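Why brightness matters outdoors can be shown with a simple ambient contrast calculation. In a see-through display, virtual content is added on top of whatever real-world light passes through the lens, so the contrast a wearer perceives is roughly (display luminance + transmitted ambient luminance) / transmitted ambient luminance. The Python sketch below applies that relation; the function names, the ambient luminance figures, the lens transmittance, and the target contrast ratio are assumed values for illustration, not measurements of any product.

```python
def ambient_contrast_ratio(display_nits, ambient_nits, transmittance):
    """Contrast of virtual content against the background seen through the lens.

    display_nits:  luminance of the virtual image reaching the eye
    ambient_nits:  luminance of the real-world background
    transmittance: fraction of ambient light the lens passes (0 to 1)
    """
    background = ambient_nits * transmittance
    if background <= 0:
        raise ValueError("transmitted ambient luminance must be positive")
    return (display_nits + background) / background


def required_display_nits(target_acr, ambient_nits, transmittance):
    """Display luminance needed at the eye to reach a target contrast ratio."""
    return (target_acr - 1.0) * ambient_nits * transmittance


if __name__ == "__main__":
    # Assumed example conditions; real environments vary widely.
    scenarios = {"indoor (assumed 200 nits)": 200.0,
                 "outdoor (assumed 5000 nits)": 5000.0}
    transmittance = 0.8   # assumed lens see-through transmittance
    target = 3.0          # assumed contrast ratio for legible overlays
    for name, ambient in scenarios.items():
        nits = required_display_nits(target, ambient, transmittance)
        print(f"{name}: about {nits:.0f} nits needed at the eye")
```

Because the required luminance scales linearly with ambient light, outdoor use demands far more light delivered to the eye than indoor use, which is why waveguide efficiency bears directly on battery life.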

In the healthcare sector, surgeons may use slim AR glasses to overlay medical imaging during procedures, with minimal discomfort during extended wear. In telemedicine, clinicians could access patient records, visualize scan data, or engage in remote consultations—all through a pair of glasses that feel like conventional eyewear.

In education, AR glasses that look and feel natural can transform classroom learning by delivering spatial, interactive content that complements traditional instruction. In military and emergency response scenarios, operators can benefit from secure, hands-free access to mission-critical information, navigation tools, and communication overlays, supported by rugged, low-profile designs enabled by thin waveguide optics.

Ecosystem and Software Implications

Hardware innovation alone cannot sustain a technology revolution; it must be supported by a robust ecosystem of software and services. The adoption of sub-millimeter waveguides will necessitate new tools for content development, 3D rendering, and spatial anchoring. Developers will need standardized APIs and design guidelines that account for the visual parameters associated with ultra-thin optics, such as expanded fields of view, varying focal planes, and low-latency rendering.

Platforms and standards such as Unity, Unreal Engine, and WebXR are already laying the groundwork for spatial computing that can scale with hardware advances. Sub-millimeter waveguides will accelerate this development by enabling a wider deployment of AR-capable devices, expanding the addressable user base and incentivizing content creation.

Additionally, with improved design feasibility, we can expect app stores and open ecosystems for AR glasses to become more prominent, offering new business models akin to the smartphone era—where users can download personalized AR experiences and utilities tailored to their needs.

Ethical and Societal Considerations

As AR glasses become less distinguishable from regular eyewear, important discussions around privacy, consent, and data security will intensify. The unobtrusive nature of sub-millimeter optics could make it easier to record or transmit data without the knowledge of those nearby. Policymakers and developers alike will need to implement clear frameworks that govern usage, especially in sensitive environments such as schools, workplaces, and public spaces.

At the same time, the accessibility afforded by lighter and more affordable AR devices may help close digital divides. If sub-millimeter waveguides reduce the cost and complexity of production as manufacturing matures, they could enable low-cost AR glasses for underserved populations. Used thoughtfully, this technology can democratize access to real-time knowledge, communication, and creativity.

Toward Transparent Computing

The culmination of these trends—miniaturized optics, AI integration, ergonomic comfort, and ecosystem maturity—leads to a future in which computing disappears into the background. This concept, often referred to as transparent computing, envisions a world where digital information is seamlessly integrated into the physical environment, available when needed, and invisible when not.

Sub-millimeter waveguides are foundational to this paradigm. By enabling AR glasses that are truly wearable, always connected, and socially unobtrusive, they remove the visual and physical friction that has long defined the boundary between user and machine. In doing so, they pave the way for computing experiences that are not only smarter but also more human-centered.

In conclusion, the future of augmented reality will be defined by how gracefully technology can integrate into our daily lives. Sub-millimeter waveguides, as a transformative optical innovation, are unlocking new possibilities for this integration by enhancing comfort, expanding visual fidelity, and enabling intelligent, adaptive interfaces. As the boundaries between the digital and physical worlds continue to dissolve, this technology will stand at the core of a future where information is no longer confined to screens—but embedded in the very fabric of our vision.

References

- Meta Reality Labs – Optical Research: https://tech.fb.com/inside-reality-labs-research-advances-in-optics-for-ar/

- Google Research – Optical Systems for AR: https://research.google.com/pubs/AR-optical-systems.html

- Apple Patent Watch – Sub-Wavelength Waveguide Patents: https://patentlyapple.com/home/tag/Waveguides

- Microsoft Research – HoloLens Optics Innovation: https://www.microsoft.com/en-us/research/project/mixed-reality-optics/

- Lumus – Waveguide Display Technology: https://lumusvision.com/technology/

- Snap Inc. (WaveOptics) – AR Display Development: https://waveoptics.com/technology-overview/

- Dispelix – Transparent AR Waveguides: https://dispelix.com/technology/

- MIT Media Lab – Nanophotonics and Metasurfaces: https://www.media.mit.edu/groups/nanophotonic-design/

- Stanford Nanofabrication Facility – Photonic Research: https://snf.stanford.edu/research/photonic-systems

- CREOL (UCF) – Center for Research in Optics and Lasers: https://creol.ucf.edu/research/optics-and-photonics/