CoreWeave’s $1.5 Billion IPO: AI Cloud Challenger Prepares to Go Public

CoreWeave, a specialized cloud provider for GPU computing, is gearing up for a highly anticipated IPO in 2025. The company has rapidly grown from a 2017 crypto-mining startup into a major player in AI infrastructure. This post gives an overview of CoreWeave’s business model and history, analyzes the details and implications of the upcoming IPO, compares CoreWeave to cloud giants and niche rivals, reviews its financial performance and funding, and examines the potential impact on the AI infrastructure market along with key risks.

Overview: From Crypto Miner to AI Cloud Powerhouse

CoreWeave’s journey began in cryptocurrency mining. Founded in 2017 by Michael Intrator, Brian Venturo, and Brannin McBee (former commodities traders), the company initially operated under the name Atlantic Crypto, mining Ethereum. As crypto markets cooled in 2018, CoreWeave pivoted toward cloud computing services, repurposing its fleet of graphics processing units (GPUs) to serve the burgeoning demand for AI and visual effects (VFX) compute. The shift from crypto to cloud was driven by customer demand, as the GPUs acquired for mining proved well suited to training machine learning models and rendering graphics. In the founders’ words, the company “came out of nowhere” onto the AI scene by leveraging resources it had already amassed.

Business Model: CoreWeave today is a cloud infrastructure provider specializing in GPU-accelerated workloads. It rents GPU capacity in its data centers to organizations for tasks like AI model training and inference, large-scale simulations, and VFX rendering. Unlike general-purpose clouds, CoreWeave focuses only on high-performance computing for AI and similar fields – it doesn’t host generic enterprise apps or long-tail services. Its value proposition is on-demand access to the latest NVIDIA GPUs at lower cost and with greater flexibility than the big cloud providers offer. CoreWeave often beats larger rivals to market with cutting-edge chips: it was among the first to deploy NVIDIA’s H100 (Hopper) GPUs and has been rolling out H200 GPUs and NVIDIA Grace Hopper (GH200) Superchips ahead of others. CoreWeave was also the first cloud to offer instances on NVIDIA’s GB200 NVL72, the rack-scale Grace Blackwell system for AI, showcasing a tight partnership with NVIDIA. NVIDIA has reciprocated by investing in CoreWeave and giving it priority access to new GPU supply. This close relationship helps CoreWeave’s customers get top-tier GPUs within weeks of release – the company claims it can deploy new chips in its servers just two weeks after receiving them from OEM partners.

Infrastructure Capabilities: Over a few years, CoreWeave built out an impressive distributed GPU infrastructure. As of late 2024, CoreWeave operates 32 data centers (primarily in North America and Europe) drawing 360 MW of power and hosting more than 250,000 NVIDIA GPUs in total. This represents explosive growth in capacity: at the end of 2023, CoreWeave had ~53,000 GPUs across 10 sites, and at the end of 2022 only ~17,000 GPUs in 3 sites. In other words, its GPU fleet roughly tripled during 2023 and then grew nearly fivefold during 2024 to meet surging demand. Most of these GPUs are NVIDIA’s high-end data center parts, the Ampere-generation A100 and the Hopper-generation H100, with increasing volumes of Blackwell-generation GPUs being added in late 2024.

CoreWeave’s software stack is custom-built to maximize GPU utilization and performance for AI workloads. It combines elements of cloud and high-performance computing: Kubernetes containers for multi-tenant isolation and easy deployment, coupled with a specialized job scheduler (“SUNK” – Slurm on Kubernetes) that allows multiple AI training or inference jobs to share clusters efficiently. Tools like Tensorizer enable fast loading of AI models from storage into GPU memory, minimizing idle time. Thanks to these optimizations, CoreWeave claims it can achieve 20%+ better computational efficiency for AI jobs than “generic” clouds using the same hardware. In practical terms, this means more usable training throughput per dollar for customers. CoreWeave supports both containerized workflows and bare-metal GPU instances, giving AI developers flexibility to run custom environments or use CoreWeave’s managed Kubernetes service. While it lacks the myriad ancillary services big clouds offer (databases, analytics tools, etc.), CoreWeave’s lean, GPU-focused platform is purpose-built for performance and rapid scaling of AI workloads.
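The year-over-year fleet growth is easy to misstate, so here is a quick check of the multiples. This is a minimal Python sketch that uses only the approximate year-end GPU and site counts quoted in this section; the `fleet` dictionary and the `growth_multiple` helper are illustrative names, not anything published by CoreWeave.

```python
# Approximate year-end fleet figures quoted in the article (GPUs, data center sites).
fleet = {
    2022: {"gpus": 17_000, "sites": 3},
    2023: {"gpus": 53_000, "sites": 10},
    2024: {"gpus": 250_000, "sites": 32},
}

def growth_multiple(year: int, key: str) -> float:
    """Ratio of a year's value to the prior year's value (3.0 means it tripled)."""
    return fleet[year][key] / fleet[year - 1][key]

for year in (2023, 2024):
    print(f"{year}: GPUs x{growth_multiple(year, 'gpus'):.1f}, "
          f"sites x{growth_multiple(year, 'sites'):.1f}")
```

With these inputs the GPU multiple is about 3.1 for 2023 and about 4.7 for 2024, so the fleet roughly tripled in 2023 and nearly quintupled in 2024. Because the inputs are rounded estimates, the multiples are approximate too.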

The $1.5 B IPO Plan

Timing, Valuation, and Investors

After a period of frenzied growth, CoreWeave is now preparing to go public – marking one of the largest AI infrastructure IPOs to date. The company confidentially filed an S-1 registration with the SEC (revealed in early March 2025) and plans to list on Nasdaq under the ticker “CRWV”. Morgan Stanley, Goldman Sachs, and JPMorgan Chase are lined up to lead the offering, signaling a significant IPO in the making. While CoreWeave has not officially announced how much it aims to raise, early reports suggested an IPO target of around $1.5 billion. More recent disclosures hint the company may even seek up to $4 billion in new capital via the IPO – an ambitious figure that underscores high expectations for CoreWeave’s valuation.

CoreWeave’s private valuation has skyrocketed over the past two years, thanks to the AI boom. In 2023, a Series B funding round valued the company at $2.4 billion. In May 2024, CoreWeave raised a $1.1 billion Series C at a whopping $19 billion valuation. Just months later, in November 2024, a secondary share sale pushed the valuation to $23 billion. In other words, CoreWeave’s implied worth jumped nearly 10× in about 18 months, reflecting investors’ aggressive bets on AI infrastructure. At a potential IPO valuation of $30 billion or more, CoreWeave would be valued at roughly 15–16× its 2024 revenue (which was $1.9 billion, per the S-1) – a rich multiple that assumes continued hyper-growth.
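The valuation multiples in this paragraph come from simple division. The sketch below, in Python, computes the price-to-sales multiple (valuation divided by 2024 revenue) at each private mark and at a hypothetical $30 B IPO valuation; the $30 B figure is the scenario discussed above, not an actual IPO price, and the helper name is illustrative.

```python
REVENUE_2024 = 1.92  # USD billions, 2024 revenue as reported in the S-1 (rounded)

# Valuations in USD billions: two private marks plus a hypothetical IPO scenario.
valuations = {
    "Series C (May 2024)": 19.0,
    "Secondary (Nov 2024)": 23.0,
    "Hypothetical IPO": 30.0,
}

def price_to_sales(valuation: float, revenue: float = REVENUE_2024) -> float:
    """Valuation divided by trailing annual revenue."""
    return valuation / revenue

for label, value in valuations.items():
    print(f"{label}: {price_to_sales(value):.1f}x 2024 revenue")

# Step-up from the 2023 Series B mark ($2.4 B) to the Nov 2024 secondary ($23 B).
series_b_to_secondary = 23.0 / 2.4
print(f"Series B -> secondary: {series_b_to_secondary:.1f}x")
```

On these inputs the November 2024 mark is about 12× 2024 revenue, the hypothetical $30 B valuation is a little over 15×, and the Series B-to-secondary step-up is about 9.6×, which is where "nearly 10×" comes from.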

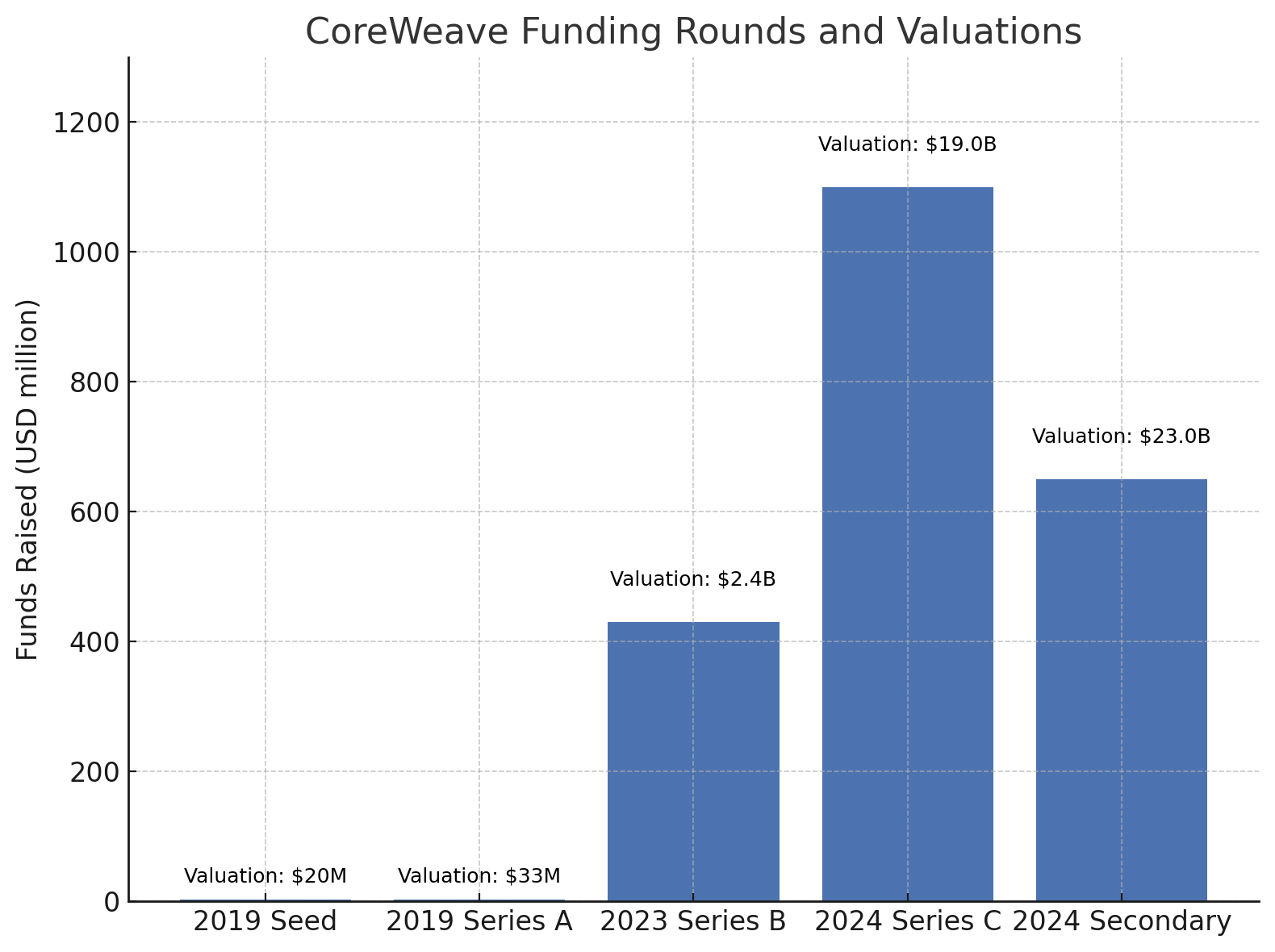

Key Investors: CoreWeave’s backers include an eclectic mix of venture funds, hedge funds, and strategic partners. The company’s funding history shows increasing participation from major investors over time (see chart below).

Chart: CoreWeave’s funding rounds from 2019 to 2024, showing capital raised (bars) and post-money valuation at each stage. Early rounds were small (seed at ~$20 M valuation), but by 2024 valuations hit the multi-billion range.

Early on, CoreWeave raised modest seed funding (around $3 M in 2019) at a ~$20 M valuation. Its Series B in 2023, however, brought in over $400 M and introduced heavyweight investors like Magnetar Capital and NVIDIA itself. By the $1.1 B Series C in 2024 (at $19 B valuation), investors included Coatue Management, Altimeter Capital, Fidelity and others. A late-2024 secondary round added names like BlackRock, Cisco Investments, and Jane Street to the roster. NVIDIA’s participation (albeit small in equity share) is strategically important – the chipmaker holds roughly 1% of voting stock post-Series C. Meanwhile, Magnetar (a hedge fund) holds about 7%. The three co-founders remain significant shareholders; CEO Michael Intrator alone retains ~38% voting control pre-IPO, thanks to founder-friendly share classes. Notably, Microsoft is not an equity investor, but has multi-year cloud contracts with CoreWeave (more on this below). In March 2025, OpenAI reportedly struck a deal to invest $350 M in CoreWeave alongside a massive compute procurement contract. This late development, coming just weeks before the IPO, led CoreWeave to amend its S-1 filing to reflect the new partnership with OpenAI. The OpenAI deal (valued at $11.9 B for CoreWeave’s services) both diversifies CoreWeave’s customer base and could influence IPO pricing – potentially boosting confidence in future revenue streams, while also raising questions about concentrated risk (OpenAI would become another very large customer).

IPO Timing and Implications: CoreWeave’s decision to go public in 2025 is timed to capitalize on intense investor appetite for AI plays. 2023 saw scant tech IPO activity, but 2024’s AI euphoria and CoreWeave’s jaw-dropping growth have set the stage for a blockbuster listing. If successful, CoreWeave’s IPO would inject substantial cash into the company. Management indicated proceeds will fund further data center expansion, fuel working capital (like buying yet more GPUs), and service its large debt load. Indeed, CoreWeave has accumulated nearly $10 B in debt financing since 2022 to finance infrastructure build-out. Going public would also give CoreWeave a liquid stock currency for potential acquisitions or partnerships in the cloud space. For the broader market, CoreWeave’s IPO is seen as a bellwether for AI infrastructure – a test of whether public investors believe the AI compute boom has durable momentum. A strong debut could validate the immense valuations placed on AI startups, while any stumbles might signal a more cautious outlook. NVIDIA, in particular, will be watching closely: as both an investor and primary supplier to CoreWeave, NVIDIA benefits from CoreWeave’s success (through GPU sales) but also faces exposure if CoreWeave falters. Finally, CoreWeave going public will shine a spotlight on its financials and risks (as detailed in the S-1), potentially prompting tougher questions from analysts than it faced in the private market. We turn to those financials next.

Financial Performance: Hyper-Growth Meets Heavy Losses

CoreWeave’s financial story is one of explosive revenue growth coupled with sizable losses. In the three years of ramp-up (2022–2024), CoreWeave’s revenue grew exponentially, reflecting its transition from a niche player to a major cloud provider:

- 2022: Revenue $16 M; Net Loss $31 M.

- 2023: Revenue $229 M (+1,331% YoY); Net Loss $594 M.

- 2024: Revenue $1.92 B (+737% YoY); Net Loss $863 M.

By 2024, annual revenue had soared to nearly $2 B as AI compute demand exploded. This staggering ~8× growth in one year came at the cost of continued high net losses (losses widened 45% in 2024). There is, however, a positive trend in CoreWeave’s margins: gross margin reached 74% in FY2024, up from ~70% the prior year. In the fourth quarter of 2024, CoreWeave earned $747 M in revenue at a 76% gross margin. Such high gross margins indicate that, excluding depreciation and overhead, the core cloud services are lucrative – the cost of operating GPUs (power, maintenance, personnel) is relatively low compared to what CoreWeave charges. The net losses stem largely from massive depreciation of GPU hardware and heavy operating expenses for expansion. In fact, one analysis noted that once depreciation is counted, CoreWeave’s effective gross margin might be closer to 30%. Regardless, the improving gross margin suggests economies of scale and better utilization as CoreWeave scaled up.
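The growth percentages in the list above can be reproduced from the dollar figures. This minimal Python sketch uses the rounded values from the list (so 2024 revenue is $1.92 B rather than the exact S-1 figure); the `yoy_pct` helper is an illustrative name.

```python
# Figures in USD millions, as listed above (2024 revenue rounded to $1.92 B).
revenue = {2022: 16, 2023: 229, 2024: 1_920}
net_loss = {2022: 31, 2023: 594, 2024: 863}

def yoy_pct(series: dict[int, float], year: int) -> float:
    """Year-over-year change in percent: (this year / last year - 1) * 100."""
    return (series[year] / series[year - 1] - 1) * 100

for year in (2023, 2024):
    multiple = revenue[year] / revenue[year - 1]
    print(f"{year}: revenue {yoy_pct(revenue, year):+.0f}% ({multiple:.1f}x), "
          f"net loss {yoy_pct(net_loss, year):+.0f}%")
```

This gives about +1,331% (14.3×) for 2023 revenue and about +738% (8.4×) for 2024, with net losses widening roughly 45% in 2024. The one-point gap from the listed +737% comes from rounding 2024 revenue to $1.92 B.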

To sustain its growth, CoreWeave has been hungry for capital. It raised billions in financing, both equity and debt, over 2022–2024 as shown earlier. The company’s funding history reveals how its valuation ballooned alongside the AI wave:

- 2019: Seed $3 M at ~$20 M valuation; later Series A $3 M at ~$33 M valuation.

- 2023: Series B $430 M (est.) at $2.4 B valuation, led by Magnetar; NVIDIA also joined this round.

- May 2024: Series C $1.1 B at $19 B valuation (investors: Coatue, Altimeter, Fidelity, etc.).

- Nov 2024: Secondary $650 M at $23 B valuation (investors: BlackRock, Cisco, Jane Street, and more).

Such rapid valuation uplift – from just $33 M in 2019 to $23 B in 2024 – is almost unprecedented. It mirrors the feverish demand for AI-focused companies in private markets. Importantly, CoreWeave also tapped significant debt financing to fuel its expansion. In May 2024, it secured a $7.5 B debt facility led by Blackstone and Magnetar, and later a $650 M credit line from JPMorgan, Goldman, and Morgan Stanley. Using debt to buy GPUs proved a strategic move: CoreWeave even used its own GPUs as collateral for loans – a creative way to leverage hardware assets to acquire more hardware. This leveraged growth strategy allowed CoreWeave to amass infrastructure quickly, but it also leaves the company with significant liabilities and interest obligations. By the end of 2024, CoreWeave still had about $1.36 B cash on hand, providing some cushion. Nonetheless, access to capital remains a risk: CoreWeave’s S-1 explicitly warns that difficulty raising additional financing could threaten its aggressive growth plans.
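To see where the valuation jumps were concentrated, the funding list above can be turned into round-to-round step-ups. This Python sketch uses the post-money valuations listed above (the ~$20 M seed mark is approximate); `rounds` and `step_ups` are illustrative names.

```python
# Post-money valuations in USD millions, as listed above.
rounds = [
    ("Seed (2019)", 20),
    ("Series A (2019)", 33),
    ("Series B (2023)", 2_400),
    ("Series C (May 2024)", 19_000),
    ("Secondary (Nov 2024)", 23_000),
]

def step_ups(ladder):
    """Return (from_round, to_round, multiple) for each pair of consecutive rounds."""
    return [(a, b, vb / va) for (a, va), (b, vb) in zip(ladder, ladder[1:])]

for prev_round, next_round, multiple in step_ups(rounds):
    print(f"{prev_round} -> {next_round}: {multiple:.1f}x")

# Overall climb from the Series A mark ($33 M) to the Nov 2024 secondary ($23 B).
overall = rounds[-1][1] / rounds[1][1]
print(f"Series A -> secondary overall: {overall:,.0f}x")
```

The largest single jump, roughly 73×, falls between the 2019 Series A and the 2023 Series B; the full climb from $33 M to $23 B is close to a 700× increase.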

Another notable aspect of CoreWeave’s financials is customer concentration. Despite a growing client roster, a majority of revenue comes from just a few customers. In 2024, a single customer – Microsoft – accounted for 62% of revenue. The top two customers together were 77% of revenue. (By comparison, in 2022 no customer was over 16% of revenue.) This extreme dependence on a handful of clients is flagged as a serious risk in the IPO prospectus. Microsoft has effectively outsourced some of its AI computing needs (likely for services like OpenAI’s ChatGPT and GitHub Copilot) to CoreWeave, under multi-year contracts reportedly worth several billion dollars. While these contracts underpin CoreWeave’s rapid revenue growth, the company itself cautions that any negative change in demand or performance by Microsoft could “adversely affect our business [and] future prospects”. The risk is not merely theoretical: in early 2025, press reports claimed Microsoft had dropped some services from CoreWeave due to delivery delays, though CoreWeave denied this. Meanwhile, OpenAI’s new deal with CoreWeave adds another big-name client (and perhaps reduces Microsoft’s share of revenue), but it still concentrates business into very few hands. On the positive side, CoreWeave has begun serving multiple notable AI firms: it works with NVIDIA (as a customer), IBM, Meta, AI startup Mistral, Cohere, and others. Diversifying beyond Microsoft/Azure is clearly a strategic focus going forward.
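Translating the concentration percentages into dollars makes the exposure more concrete. This Python sketch applies the 62% and 77% shares to the rounded 2024 revenue of $1.92 B; the second customer's 15% share is derived (77% minus 62%), since the text does not name that customer, and all names in the code are illustrative.

```python
REVENUE_2024_M = 1_920  # USD millions, rounded 2024 revenue

# Revenue shares from the text; the second customer's share is derived as 77% - 62%.
shares = {
    "Microsoft": 0.62,
    "Second-largest customer": 0.77 - 0.62,
}
shares["All other customers"] = 1.0 - sum(shares.values())

for customer, share in shares.items():
    print(f"{customer}: {share:.0%} ~ ${share * REVENUE_2024_M:,.0f} M")
```

Roughly $1.19 B of 2024 revenue came from Microsoft, about $288 M from the second-largest customer, and only about $442 M from everyone else combined.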

In sum, CoreWeave’s financial profile is that of a hyper-growth, pre-profit company: booming revenues, improving gross margins, substantial net losses, and heavy reliance on continued big-ticket contracts and capital infusions. Investors in the IPO will weigh whether CoreWeave can eventually turn this growth into sustainable profits. There is a plausible path to profitability – one analysis suggests that if CoreWeave’s revenue growth slows to a more moderate pace (say, doubling in 2025 instead of 8×) and operating costs stabilize, the company could break even in a couple of years. Indeed, CoreWeave’s adjusted operating income margin was around 19% by end of 2024 on a run-rate basis, indicating improving efficiency. But reaching GAAP profitability will require continuing to fill its data centers with paying customers and maintaining pricing power as competition heats up.

Competitive Landscape: CoreWeave vs. Cloud Titans and GPU Specialists

As a relatively young company, CoreWeave finds itself competing on two fronts – against the “Hyperscalers” (massive cloud providers like Amazon Web Services, Microsoft Azure, and Google Cloud) and against other niche GPU cloud providers (smaller outfits focused on AI compute). Each set of competitors presents different challenges.

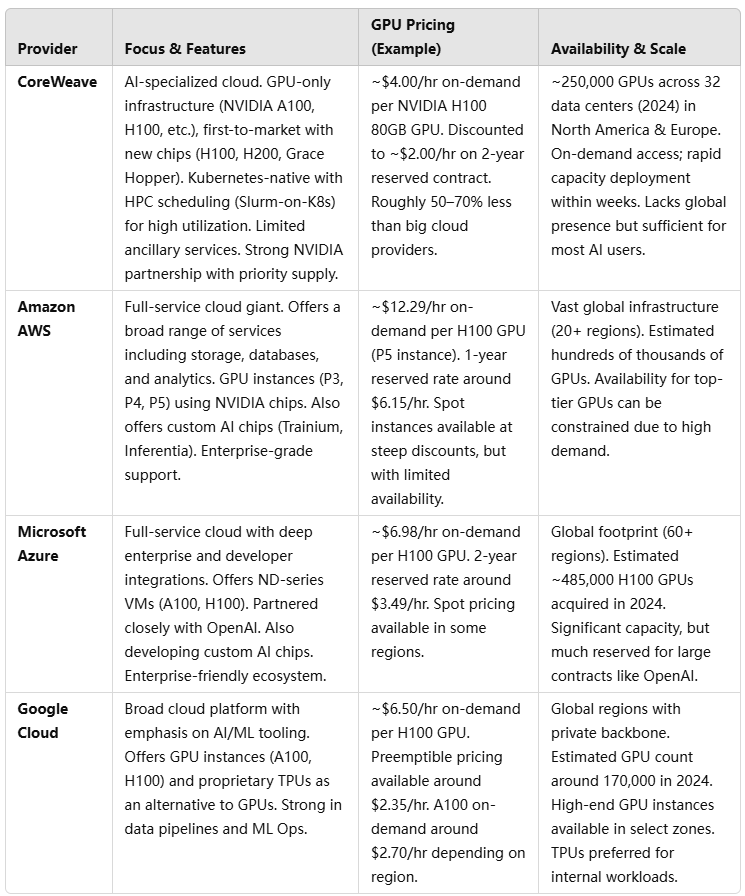

Against AWS, Azure, and Google Cloud: In the cloud arena, CoreWeave’s primary rivals are the big three public clouds. These giants have virtually unlimited resources, global reach, and deeply integrated ecosystems of services. However, CoreWeave has carved out a strong differentiator: a laser focus on AI GPU capacity and a willingness to offer it cheaper and faster. Traditional clouds do offer GPU instances (e.g. AWS’s P4 and newer P5 instances, Azure’s ND-series VMs, GCP’s A2 and A3 instances), but they “charge more per hour for the same H100 GPU simply because they can” – leveraging their market power and enterprise service offerings. For example, on-demand pricing for an NVIDIA H100 80GB GPU is around $12.29/hour on AWS and $6.98/hour on Azure, versus roughly $4.00/hour on a specialized GPU cloud like CoreWeave. At those rates, CoreWeave is about 43% cheaper than Azure and about 67% cheaper than AWS for equivalent hardware, a huge draw for cost-sensitive AI startups. Part of the reason is CoreWeave’s lean approach: it doesn’t bundle the fleet of extra services and premium support that big clouds offer, so its pricing reflects mostly the raw compute cost. Big providers also often require long-term commitments or reserved instances to get better rates, whereas CoreWeave emphasizes on-demand flexibility (while also offering discounts for longer reservations).
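The price gap is easier to judge as a percentage and as a bill for a concrete job. This Python sketch uses the per-GPU-hour on-demand prices quoted above; the 1,000-GPU, 30-day job is a hypothetical size chosen only for illustration, and real bills would also depend on reservations, discounts, storage, and networking.

```python
# On-demand price per H100 80GB GPU-hour quoted above (USD).
hourly_price = {"AWS": 12.29, "Azure": 6.98, "CoreWeave": 4.00}

def discount_vs(provider: str, baseline: str = "CoreWeave") -> float:
    """Fraction by which the baseline's price is below `provider`'s price."""
    return 1 - hourly_price[baseline] / hourly_price[provider]

# Hypothetical job size for illustration: 1,000 GPUs running for 30 days.
gpu_hours = 1_000 * 24 * 30

for provider in ("AWS", "Azure"):
    print(f"CoreWeave vs {provider}: {discount_vs(provider):.0%} cheaper")
for provider, price in hourly_price.items():
    print(f"{provider}: ${price * gpu_hours:,.0f} for {gpu_hours:,} GPU-hours")
```

On these list prices the gap is roughly 67% against AWS and 43% against Azure; for the hypothetical 720,000 GPU-hour job that is about $8.85 M on AWS, $5.03 M on Azure, and $2.88 M on CoreWeave.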

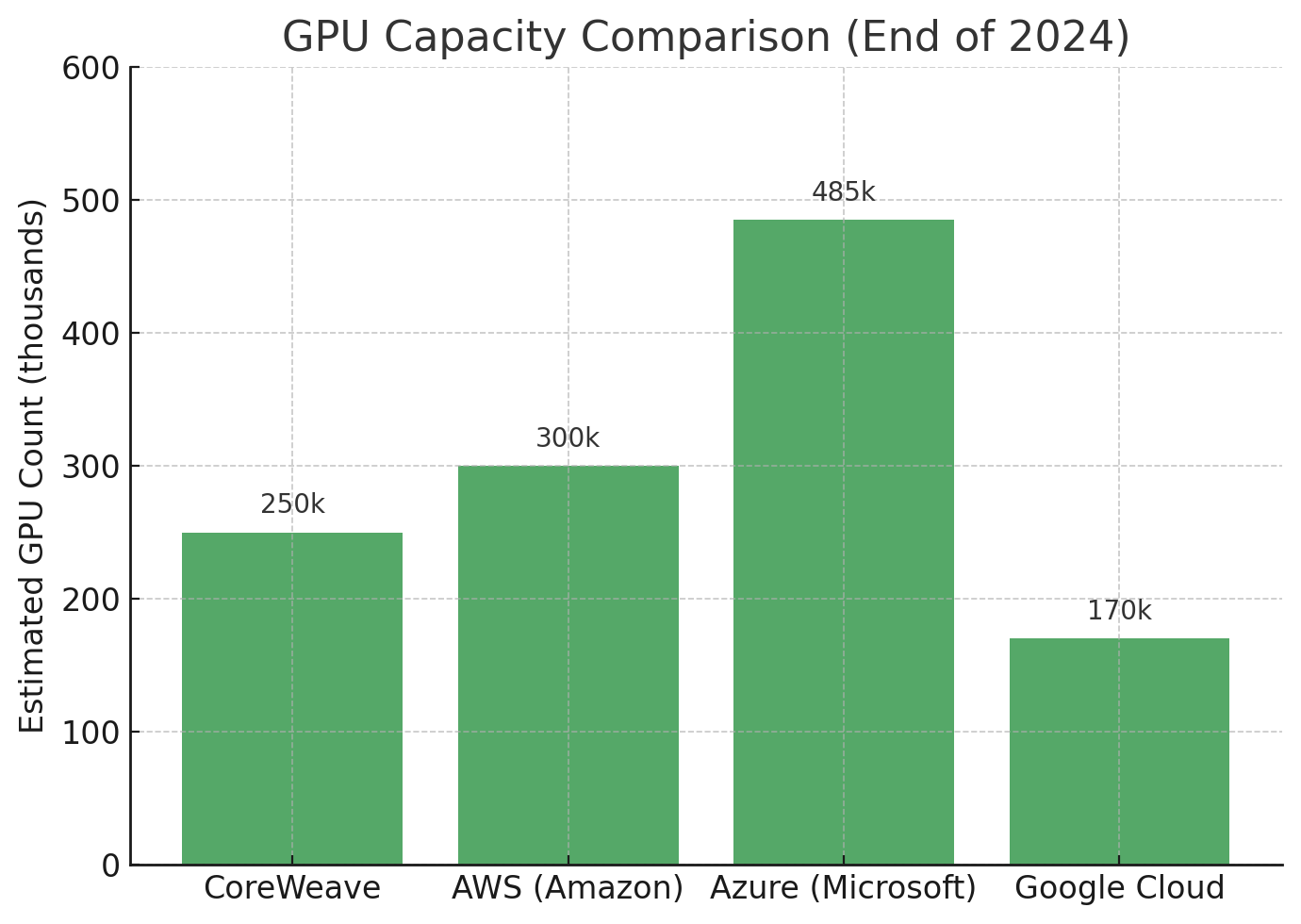

Another edge for CoreWeave is availability of the latest GPUs. The company’s close partnership with NVIDIA gave it a first-mover advantage in deploying H100 GPUs in 2022–2023 and Blackwell GPUs in 2024. In contrast, AWS and others initially had limited quantities of H100s – Azure’s massive orders came online only during 2024, and AWS’s H100-based P5 instances rolled out later in 2023. When high-end GPUs were scarce, Microsoft itself turned to CoreWeave to fulfill capacity needs. This highlights a key competitive dynamic: the hyperscalers were capacity-constrained for cutting-edge AI chips in 2023–2024, whereas CoreWeave (with NVIDIA’s help) scaled up quickly to meet the GPU shortage. In effect, CoreWeave became a relief valve that let Microsoft handle surging AI workloads when its own cloud (Azure) ran short on GPUs. As of end-2024, CoreWeave’s 250k+ GPU fleet put it in the same league as the big players in terms of sheer hardware – a fact few would have imagined a couple of years earlier. To illustrate, Microsoft Azure had an estimated 485,000 NVIDIA H100 GPUs on order in 2024, and Meta (though not a public cloud provider) was running on the order of 600,000 GPUs for its own AI R&D. Amazon and Google were estimated to have purchased ~196k and ~169k H100 GPUs respectively in 2024 (they also invest in their own custom AI chips). CoreWeave’s quarter-million GPUs make it comparable to – if not larger than – Google Cloud’s GPU footprint, though still behind Azure’s dedicated AI builds and AWS’s total (including older generations). The chart below compares the approximate GPU capacity:

Chart: Estimated GPU counts (in thousands) at end of 2024 for CoreWeave and key cloud competitors. Azure (Microsoft) led with ~485k NVIDIA GPUs acquired in 2024, while AWS and Google had on the order of 200–300k each. CoreWeave’s fleet of ~250k GPUs made it comparable to a hyperscaler in raw capacity.

CoreWeave’s strong position, however, comes with limitations relative to the hyperscalers. The large clouds provide a far broader suite of services – from storage, databases, and serverless functions to enterprise support and compliance features – which many big customers require. CoreWeave’s offerings are more narrowly focused: if a client needs an integrated solution combining AI training with data warehousing and analytics, AWS/Azure/GCP can bundle all of that (at a higher cost), whereas CoreWeave would handle only the GPU compute portion. This means CoreWeave currently appeals most to customers who either have simple needs (just raw compute) or are technically savvy enough to mix and match providers (using CoreWeave for GPUs while getting other services elsewhere). Another challenge is trust and track record – AWS and Microsoft have long-standing enterprise relationships and reliability histories. CoreWeave is newer and smaller (though it has so far proven capable of running large-scale workloads for demanding clients).

The hyperscalers are also investing in alternatives to NVIDIA GPUs that could erode CoreWeave’s niche. Google has its TPU (Tensor Processing Unit) hardware, now in its sixth generation (Trillium), which it offers as a cloud service for AI training and which can outperform GPUs on certain models. Amazon has developed custom AI chips (Trainium for training and Inferentia for inference) offered at lower price points on AWS. If these proprietary accelerators gain adoption, some workloads might bypass GPU rental entirely – a potential competitive threat to pure GPU clouds. So far, NVIDIA’s ecosystem remains dominant for most AI developers, but this is an area to watch.

In response to these strengths of incumbents, CoreWeave has emphasized simplicity and support for AI teams. It claims to cut out cloud complexity – “no need for dozens of services to configure, just access GPUs via our platform.” It has also built partnerships for storage (e.g. with Pure Storage’s FlashBlade systems) to provide high-performance data throughput to GPUs. Still, one industry observer noted that CoreWeave’s “limited service catalog” is a disadvantage – it lacks the extensive ancillary tools of giants. Over time, if CoreWeave succeeds, it may choose to expand its service offerings, but that could dilute its focus and cost advantages.

Versus Other GPU Cloud Providers: CoreWeave is not the only “GPU rental” upstart. A number of smaller companies and startups specialize in offering GPU instances for AI, often targeting specific niches or use-cases. These include Lambda Labs, Paperspace, RunPod, Vast.ai, Cirrascale, Oracle Cloud’s GPU services (Oracle is smaller than the big 3 but aggressively partnering with NVIDIA), and even NVIDIA’s own DGX Cloud (which actually runs on partner clouds like Oracle and Azure). Among these, Lambda Labs is a notable competitor: it started by selling physical GPU servers and later launched Lambda Cloud, offering on-demand V100, A100, etc., primarily catering to machine learning researchers. Lambda’s cloud is relatively small scale (limited GPU types and regions) but has a loyal following for its simplicity and multi-GPU configurations. Paperspace (acquired by DigitalOcean in 2023) offered easy-to-use GPU VMs, especially for individuals, but not at CoreWeave’s scale. RunPod and Vast.ai operate marketplace models where spare GPU capacity is rented out at low prices – even lower than CoreWeave – but with less reliability/consistency (since they often use consumer-grade GPUs or interruptible setups).

Compared to these, CoreWeave stands out for its scale and funding. It has tens of thousands of GPUs more than most boutique providers and far more capital to continue expanding. This has allowed CoreWeave to secure big enterprise contracts (like the Microsoft and OpenAI deals) that smaller rivals likely could not fulfill. For instance, Lambda Labs’ cloud was limited to a few GPU models and likely a few thousand GPUs in total – suitable for smaller deployments but not a multi-billion dollar contract. That said, many of these niche players compete on price for smaller users. Some offer H100 GPU rentals for as little as $2–3/hour (spot pricing or long-term). CoreWeave’s pricing at ~$4.00/hr on-demand is already aggressive relative to AWS, but the presence of these low-cost challengers means it can’t drastically raise prices either without customers exploring alternatives. In effect, CoreWeave is squeezed between bigger rivals with stickier services on one side and smaller rivals willing to undercut on price on the other.

How does CoreWeave maintain an edge? Primarily through its close NVIDIA partnership and massive scale, which give it preferential pricing on GPUs and the ability to serve large workloads. Additionally, CoreWeave’s engineering (like the aforementioned scheduling optimizations) can deliver better performance-per-GPU, which the low-cost marketplaces likely don’t match. Its strong funding also lets it offer favorable terms – for example, it can afford to extend credit or custom pricing for important clients, whereas a smaller player might not. Finally, CoreWeave has been willing to take on huge capital expenditures upfront (buying GPUs on debt) to grab market share quickly, a risky strategy smaller competitors might shy away from. This has established CoreWeave as the leading independent AI cloud provider outside the big three.

It’s also worth mentioning Oracle Cloud. Although Oracle is a major tech company, its cloud is something of a niche player in AI, positioned as an alternative for high-performance GPU workloads. Oracle’s cloud hosts NVIDIA’s DGX Cloud offering and has made deals with companies like Cohere (an AI startup) to provide GPU capacity. Oracle doesn’t disclose GPU counts, but it’s an example of a “second-tier” cloud provider trying to capture the AI wave. CoreWeave’s competition thus spans from giants to mid-sized clouds to startups.

The table below compares CoreWeave with AWS, Azure, and Google Cloud on key features, pricing, and availability of GPU services:

As shown above, CoreWeave competes primarily on cost and agility, whereas AWS, Azure, and GCP compete on breadth and deep pockets. Paradoxically, the big clouds are also CoreWeave’s customers in some cases. Microsoft’s reliance on CoreWeave as a GPU supplier shows that even the hyperscalers sometimes act as partners or customers when it comes to sourcing GPU capacity. This blurs the competitive lines: CoreWeave isn’t so much displacing Azure’s business as supplementing it under the hood. However, that may be temporary. Microsoft is unlikely to depend on a third party indefinitely if it can build out its own capacity (as it is rapidly doing). Google and Amazon, too, will ramp up supply or push their alternatives to avoid ceding too much demand to external GPU specialists.

CoreWeave’s emergence has been described as a “breath of fresh air” for enterprises hungry for AI compute. Customers who felt locked into slow or expensive contracts with the “800-lb gorilla” clouds now have an alternative vendor eager to please. In that sense, CoreWeave and similar firms are injecting competitive pressure into a market that was dominated by a few players. Amazon’s CEO has even acknowledged the rise of specialized providers, and it wouldn’t be surprising if AWS adjusted its pricing or policies for AI instances to stay competitive (AWS has, for example, introduced EC2 Capacity Blocks for ML, which let customers reserve GPU instances for a fixed, short period). The competitive landscape remains dynamic – CoreWeave must keep scaling and innovating to stay ahead, while fending off both the giants (who can cut prices or bundle deals to win back workloads) and the upstarts (who undercut on niche segments).

Impact on the AI Infrastructure Market

CoreWeave’s rapid ascent and impending IPO carry broader implications for the AI infrastructure ecosystem.

Democratizing Access to AI Compute: Perhaps the most significant impact is improved access to large-scale compute for AI startups, research labs, and smaller companies. Not long ago, training a state-of-the-art AI model required either owning expensive GPU clusters or negotiating cloud contracts with hyperscalers – both high barriers for newcomers. CoreWeave’s model of renting GPUs on demand (or on short-term reservations) at lower prices reduces the barrier to entry for AI innovation. Teams that struggled to get GPU quota on AWS or couldn’t afford Azure’s rates now have an alternative. This could accelerate AI development by enabling more experiments, more model training runs, and new entrants in the field. In essence, CoreWeave and similar providers are helping commoditize GPU compute, turning it into a readily available utility. There are parallels to how cloud computing in general democratized access to servers in the 2010s; now it’s happening specifically for high-end AI accelerators. Over $15 billion in contracted future GPU usage was on CoreWeave’s books as of its IPO filing (remaining performance obligations), indicating that many companies have locked in multi-year commitments – a sign that AI compute is being treated as a long-term need in many industries, not just a one-off experiment.

Competitive Pressure Leading to Better Pricing: With CoreWeave in the mix, the big providers will likely be pressured to adjust pricing or terms for AI infrastructure. We may see more competitive pricing for GPU instances on AWS, Azure, and GCP if CoreWeave keeps luring away big contracts. In fact, industry analysts expect the “pricing spread” – the gap between big cloud prices and specialist prices – to diminish over time as the hyperscalers’ pricing power erodes. Customers, especially large AI firms, will negotiate harder now that they have viable alternatives. This could result in cost savings for AI companies across the board, though it may pinch cloud providers’ margins. Already, Microsoft’s huge orders of GPUs and Google’s investments in TPUs are partly aimed at ensuring they can offer capacity at scale without driving customers to third parties. NVIDIA’s interests are served by a competitive cloud market – more competition means more aggregate demand for its chips. Indeed, NVIDIA CEO Jensen Huang has remarked that it’s beneficial to have many “AI clouds” rising, not just the usual suspects.

Innovation and Specialization: CoreWeave’s success validates a trend of infrastructure specialization. Rather than every cloud trying to do everything, we see the emergence of clouds optimized for specific workloads (AI in this case). This could spur further innovation in how cloud infrastructure is designed. For example, CoreWeave’s integration of Slurm (an HPC scheduler) with Kubernetes is an innovative approach blending cloud flexibility with supercomputer-like efficiency. If it indeed yields 20% better throughput on AI jobs, competitors might adopt similar techniques. We might also see more vertical clouds: perhaps similar concepts for other domains (imagine a cloud optimized for blockchain, or for scientific computing, etc.). For the AI domain, CoreWeave’s growth could encourage others to invest in new hardware types or software stack improvements to differentiate further – e.g., companies like Cerebras (with its AI wafer-scale chip) partner with cloud services to offer novel options beyond GPUs.
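A throughput gain and a cost saving are not the same percentage. This Python sketch assumes identical hardware at an identical hourly price (the $4.00 H100 rate quoted earlier, used purely for illustration) and treats the claimed 20% efficiency gain as 1.2× useful work per GPU-hour; `cost_per_unit_work` is an illustrative helper, not a vendor metric.

```python
def cost_per_unit_work(price_per_gpu_hour: float, relative_throughput: float) -> float:
    """Dollars per unit of useful work, where 1.0 = baseline throughput per GPU-hour."""
    return price_per_gpu_hour / relative_throughput

PRICE = 4.00  # same hardware and same hourly price, for an apples-to-apples view

baseline = cost_per_unit_work(PRICE, 1.0)
optimized = cost_per_unit_work(PRICE, 1.2)  # the claimed "20% better" throughput

saving = 1 - optimized / baseline
print(f"baseline ${baseline:.2f}, optimized ${optimized:.2f}, saving {saving:.1%}")
```

Under these assumptions, 20% more throughput cuts the cost per unit of work by one-sixth (about 16.7%), not by 20%, because the saving is 1 - 1/1.2.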

Market Structure and Customer Choice: The AI compute market might shift from being an oligopoly (just a few large clouds) to a more competitive landscape with several significant providers. In addition to CoreWeave, startups like Lambda, Paperspace, and Phoenix (in Europe) are expanding, and big cloud-alternative players like Oracle or IBM Cloud are vying for AI workloads. More competition generally means customers can adopt a multi-cloud strategy to optimize for price and performance – e.g., train models on CoreWeave, deploy inference on AWS, etc. This could reduce the fear of vendor lock-in that has concerned some AI firms. For instance, OpenAI initially tied itself closely to Azure; now, with OpenAI also engaging CoreWeave, it diversifies its compute suppliers, which could improve its negotiating leverage and redundancy.

Scale and Supply Chain Effects: CoreWeave scaling up to hundreds of thousands of GPUs also has implications upstream in the supply chain. NVIDIA’s revenue has been supercharged by such orders; if CoreWeave and peers continue to build out, it provides steady demand for GPU manufacturers. It also means power and data center real estate are in high demand – CoreWeave has contracted 1.3 gigawatts of power capacity for its data centers (360 MW active by 2024). The AI compute boom is driving massive data center construction, benefiting infrastructure providers (colocation, power, cooling industries). However, if multiple players (big and small) are all hoarding GPUs, there is a risk of short-term oversupply if demand ever pauses. For now, though, most observers believe we’re in an AI compute undersupply phase – evidenced by the backlog of orders and unmet appetite for GPUs in 2023.
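The power figures also give a rough sense of scale. This Python sketch divides the 360 MW of active power by the ~250,000-GPU fleet and compares active to contracted power. It is a crude all-in ratio: facility power also feeds CPUs, networking, storage, and cooling, and newer GPUs draw more power, so the full-build-out figure is a naive extrapolation for illustration only, not a forecast.

```python
ACTIVE_POWER_W = 360e6      # 360 MW active at end of 2024
CONTRACTED_POWER_W = 1.3e9  # 1.3 GW contracted
GPUS = 250_000              # approximate fleet size at end of 2024

watts_per_gpu = ACTIVE_POWER_W / GPUS
share_active = ACTIVE_POWER_W / CONTRACTED_POWER_W
# Naive extrapolation: assumes the same all-in watts per GPU at full build-out.
gpus_at_full_buildout = CONTRACTED_POWER_W / watts_per_gpu

print(f"~{watts_per_gpu:,.0f} W of facility power per GPU")
print(f"{share_active:.0%} of contracted power active")
print(f"~{gpus_at_full_buildout:,.0f} GPUs if contracted power were filled the same way")
```

That works out to roughly 1.4 kW of facility power per GPU, with only about 28% of contracted power active at the end of 2024.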

Changes in AI Software Development: As compute becomes more accessible, we could see faster progress in AI models and possibly more diversity in development. When only a few tech giants had the compute to train frontier models, progress was concentrated. Now startups can access tens of thousands of GPUs via CoreWeave to train their own large language models (LLMs) or image generators. For example, Stability AI (behind Stable Diffusion) reportedly leveraged CoreWeave’s cloud for some of its needs. More accessible compute might lead to more competition in AI models, which is healthy for the ecosystem (though it also could mean duplication of efforts). It can also drive the price of AI inference down, potentially making AI services cheaper for end-users.

On the flip side, if CoreWeave’s model leads to a glut of capacity in a few years, it could trigger a price war. AI compute could become a low-margin utility as too many players chase the same customers. Some analysts already caution that today’s high margins, for NVIDIA and GPU cloud providers alike, may not last once supply catches up with demand. If GPUs become abundant, the larger clouds could cut prices drastically, subsidizing AI compute with their other revenue streams and squeezing pure-play providers. CoreWeave’s impact could therefore be double-edged. In the short term it democratizes and energizes the market; in the long term it may contribute to the commoditization of AI compute.
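Here is a minimal sketch of why a price war squeezes margins. Much of the cost of a rented GPU-hour is hardware depreciation, so margin depends on the rental price, the hardware's assumed useful life, and utilization. The following inputs are hypothetical figures, not CoreWeave's:

- capex of $35,000 per GPU;
- operating cost of $0.40 per GPU-hour;
- 80% utilization.

The model also ignores financing costs.

```python
# Hypothetical unit economics of renting out one GPU.
# All inputs are illustrative assumptions, not CoreWeave figures.

HOURS_PER_YEAR = 24 * 365


def cost_per_gpu_hour(capex_per_gpu: float, useful_life_years: float,
                      opex_per_gpu_hour: float, utilization: float) -> float:
    """Cost per *billed* GPU-hour: straight-line depreciation plus operating
    cost (power, space, staff), spread over the fraction of hours rented."""
    depreciation_per_hour = capex_per_gpu / (useful_life_years * HOURS_PER_YEAR)
    return (depreciation_per_hour + opex_per_gpu_hour) / utilization


def unit_margin(price_per_gpu_hour: float, cost: float) -> float:
    """Margin after depreciation and operating cost (financing ignored)."""
    return (price_per_gpu_hour - cost) / price_per_gpu_hour


# Assumed: $35k all-in capex per GPU, $0.40/h opex, 80% utilization.
for life_years in (6, 4):
    cost = cost_per_gpu_hour(35_000, life_years, 0.40, 0.80)
    for price in (4.00, 2.50, 1.75):  # hypothetical rental rates, $/GPU-hour
        print(f"life={life_years}y price=${price:.2f}/h "
              f"cost=${cost:.2f}/h margin={unit_margin(price, cost):.0%}")
```

With these made-up inputs, a six-year useful life puts the cost floor near $1.33 per billed hour. A four-year life raises it to roughly $1.75. A price drop to that level would therefore leave a modest cushion under the longer schedule but wipe out the margin under the shorter one.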

Risks and Challenges Ahead

Despite CoreWeave’s impressive trajectory, there are significant risks associated with its business model and with the upcoming IPO. Potential investors and observers should weigh these factors:

- Customer Concentration & Dependency: As discussed, CoreWeave’s revenue is highly concentrated. Microsoft accounted for 62% of 2024 revenue. With OpenAI’s new deal, a large share of future revenue will come from two closely linked companies, since Microsoft is OpenAI’s major partner. This is a classic risk. If Microsoft or OpenAI reduces its usage for any reason, CoreWeave could face a sudden revenue shortfall. Microsoft might build enough internal capacity, OpenAI might develop its own data centers, or either might switch to another provider. Microsoft’s AI strategy could also shift: it might run more OpenAI workloads in Azure’s own data centers or optimize software to use fewer GPUs. CoreWeave openly warns that any “negative changes in demand from Microsoft… or in our broader strategic relationship with Microsoft” would hurt its results. OpenAI’s new equity stake complicates the picture. Microsoft may be less inclined to cut ties now that OpenAI relies on CoreWeave directly. It may also weigh more carefully how much it depends on an external provider versus bringing the workload back in-house.

- Sustainability of Growth and Valuation: CoreWeave’s valuation (north of $20 B in private markets) assumes extraordinary growth and eventual profitability. To justify a rumored ~$35 B IPO market cap, CoreWeave might eventually need to generate on the order of $1.5 B in annual EBITDA; today it still reports net losses. The implicit bet is that revenue keeps climbing steeply and that losses turn into profits as utilization improves and costs flatten.

  But how much of CoreWeave’s growth was fueled by the unusual GPU shortage of 2023? That shortage will not last indefinitely. Supply is catching up as NVIDIA and others ramp production, and by 2025–2026 the market could flip to oversupply. If GPU prices and cloud rental rates fall, CoreWeave’s revenue growth could slow or even reverse unless it adds enough new customers to offset the price declines. Rental prices for previous-generation GPUs such as the H100 are already expected to drop sharply once Blackwell GPUs become widely available, since many clients will prefer the faster chip. CoreWeave holds a large H100 fleet whose utilization or pricing could suffer as a result. It will have to keep investing in newer, expensive hardware while possibly writing down older equipment faster than planned.

  This dynamic raises the specter of commoditization: if GPU compute becomes a commodity service, margins could thin toward those of other equipment-rental businesses. CoreWeave’s hype-fueled valuation could face a reality check if growth moderates to more normal levels (e.g., 50% year over year instead of 700%). The company will need to show that it can win business beyond the current gold rush and operate efficiently at scale.

- Capital Intensity and Financial Risk: CoreWeave’s expansion requires enormous capital outlays for GPUs, data centers, and more. It has taken on roughly $10 B in debt commitments, so rising interest rates or tightening credit markets could strain its finances, with interest expense deepening its net losses. NVIDIA has been supportive, possibly through vendor financing or deferred payment terms, but that may not cover all of CoreWeave’s needs. Public-market sentiment could turn and leave CoreWeave unable to raise additional equity or debt when needed. It might then have to sharply curtail its growth plans or even scale down operations, a risk the S-1 acknowledges.

  Hardware depreciation is also a major expense. GPUs may lose value faster if newer models, such as the upcoming Blackwell generation, render them obsolete. That could force write-downs or shorter useful-life assumptions that deepen accounting losses. CoreWeave must strike a delicate balance: grow fast enough to meet demand and keep its massive capacity generating revenue, without over-extending so far that hardware sits idle. If the AI boom cooled unexpectedly (for example, because end-customer adoption of AI slowed), CoreWeave could be left with costly overcapacity.

- Competition and Margin Pressure: The competitive landscape explored earlier is itself a risk. The hyperscalers could significantly undercut CoreWeave’s pricing for key clients, or bundle AI compute with other lucrative contracts, making it hard for CoreWeave to win deals. They could also invest in technology that reduces customers’ reliance on external GPUs. Examples include efficiency improvements that cut the GPU hours a client needs, or their own chips, such as Google’s TPUs, which CoreWeave cannot offer. On the niche side, a competitor with an even more cost-efficient model could erode CoreWeave’s cost leadership, for instance one built on second-hand GPUs or a new low-cost architecture.

  Barriers to entry in GPU rental are not especially high. Many small companies already rent out GPUs, though not at CoreWeave’s scale, and if margins stay attractive, more entrants will appear. Some AI startups and even VCs are already exploring running their own GPU clusters, or forming cooperatives, rather than paying third-party fees. CoreWeave’s largest customers could eventually reach enough scale to go direct, much as large cloud users sometimes “repatriate” workloads to their own data centers to save money. OpenAI’s Stargate initiative is one example. Announced in January 2025 with partners including SoftBank and Oracle to build dedicated AI data centers, it could lessen OpenAI’s long-term dependence on Azure or CoreWeave.

- Reliance on NVIDIA (Single-Supplier Risk): CoreWeave’s entire hardware stack is built on NVIDIA GPUs and related interconnects such as NVLink. NVIDIA currently holds a near-monopoly on high-end AI chips. CoreWeave could be severely affected in two ways. NVIDIA’s production could be disrupted, for example by supply chain problems or export restrictions. NVIDIA could also change strategy, for instance by offering more cloud services itself and thus competing with its own customers. To be fair, NVIDIA benefits from selling to players like CoreWeave, so it has every incentive to keep supplying them. Pricing power is another concern. NVIDIA has been raising chip prices amid immense demand, which could squeeze CoreWeave’s margins if the increases cannot be passed on. A credible alternative could also emerge, such as AMD’s MI300 accelerators or new AI chips from Intel or startups. CoreWeave would then need to offer it quickly or risk losing clients to providers that do. Being tied to one vendor’s roadmap is risky if that vendor stumbles or its competitors catch up.

- Regulatory and Geopolitical Risks: The AI and cloud sectors are drawing increasing regulatory scrutiny. Most regulation targets AI software (ethics, data, and so on) rather than infrastructure, but there are areas of concern.

  Export controls are one. U.S. rules now restrict sales of top-tier NVIDIA GPUs, such as certain A100 and H100 models, to China and other regions. As a U.S.-based provider, CoreWeave must comply, which could limit its market if it ever wanted to serve clients in those countries. Governments could also impose security requirements on cloud providers, such as workload isolation, though these tend to fall more heavily on hyperscalers handling sensitive data.

  Environmental regulation is another angle, because massive GPU farms consume a great deal of energy. As CoreWeave scales, it may face pressure or mandates to use green energy or improve efficiency, which could raise costs. New data centers may also face tougher permitting tied to power usage effectiveness (PUE) and heat output.

- Uncertain Trajectory of AI Demand: CoreWeave’s S-1 itself lists the “uncertain trajectory of AI and its commercialization” as a risk. The AI hype cycle could hit setbacks. If companies don’t see the expected returns on their AI investments, they may pull back spending. CoreWeave is essentially riding the wave of AI adoption. Any slowdown in AI research funding, or cooling enthusiasm for model improvements, could translate into slower growth in cloud demand. If end users push back on AI for privacy or regulatory reasons, some AI projects might also be scaled down. This risk seems low in the near term, since AI investment remains strong as of 2025, but technology fields can evolve in nonlinear ways. A breakthrough in AI efficiency that needs far less compute for the same results could reduce demand for brute-force GPU power. CoreWeave also cites the risk that its customers fail to find viable use cases or enough user adoption for their AI products, which would in turn hurt demand for its compute.

- Execution and Operational Risk: Fast-growing companies often face execution challenges: scaling support, maintaining reliability, and managing a rapidly expanding team. CoreWeave has grown from a small operation to hundreds of employees. Any significant outage or service problem could damage its reputation with exactly the customers it can least afford to lose. The S-1’s “warts and all” disclosure hints at some weaknesses, and there have been reports of delivery issues and delays in some cases.

  Founder control is also very high: the founders hold about 82% of voting power through super-voting shares. New investors will therefore have little say in governance. If leadership makes missteps, outside shareholders may be unable to course-correct easily, a concern similar to the one raised about founder control at WeWork. The founders also took substantial liquidity in 2024, cashing out about $500 M through secondary sales. That is not necessarily a risk in itself. Still, it means they secured personal gains ahead of public investors, which is worth keeping in mind when considering incentives.
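Two of the risks above, customer concentration and valuation, reduce to simple arithmetic. The Python sketch below uses figures cited in this post:

- the 62% Microsoft revenue share;
- the rumored ~$35 B market cap;
- roughly $1.5 B of EBITDA.

The sizes of the Microsoft cutbacks are hypothetical assumptions. A proper EV/EBITDA multiple uses enterprise value (market cap plus net debt). Because CoreWeave borrows heavily, its true multiple would be higher than this simplified figure.

```python
# Back-of-the-envelope checks on customer concentration and valuation.
# Shares and valuation figures come from the text; cutback sizes are hypothetical.


def revenue_after_customer_cut(top_customer_share: float, cut_fraction: float) -> float:
    """Remaining revenue, as a fraction of today's, if the largest customer
    cuts its spending by cut_fraction and nothing replaces it."""
    return 1.0 - top_customer_share * cut_fraction


def implied_multiple(valuation: float, ebitda: float) -> float:
    """Valuation divided by EBITDA. Market cap stands in for enterprise value
    here, so this understates the multiple for a heavily indebted company."""
    return valuation / ebitda


microsoft_share = 0.62                # Microsoft's share of 2024 revenue (from the text)
for cut in (0.25, 0.50):              # hypothetical cutbacks
    remaining = revenue_after_customer_cut(microsoft_share, cut)
    print(f"Microsoft cuts spending {cut:.0%}: revenue falls to {remaining:.0%} of baseline")

# Rumored ~$35B market cap vs. ~$1.5B EBITDA (from the text)
print(f"implied multiple: ~{implied_multiple(35e9, 1.5e9):.0f}x EBITDA")
```

With no replacement business, a 50% cut in Microsoft's spending alone would shrink total revenue by about 31%. The valuation math implies a multiple of roughly 23 times EBITDA before accounting for debt.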

In summary, CoreWeave’s story is compelling, a fast-growing David challenging Goliaths in the AI cloud race, but it is not without peril. The IPO will test how investors perceive these risks. A successful debut could give CoreWeave the resources and credibility to mitigate some of them by paying down debt, diversifying its customer base, and continuing to innovate. Once public, however, CoreWeave’s valuation will face quarterly scrutiny. If it fails to meet lofty growth expectations, its stock could be volatile. That in turn could affect employee morale and the company’s capacity to invest.

Conclusion: A Pivotal Moment for AI Cloud Computing

CoreWeave’s upcoming IPO will mark a milestone in the evolution of AI infrastructure. In a few short years, the company has transformed from an obscure crypto mining outfit into a linchpin of the AI boom, supplying compute power to some of the world’s leading AI endeavors. A successful IPO would not only validate CoreWeave’s business model but also signal confidence in the continued expansion of AI-centric cloud services. Investors and tech observers are watching closely because CoreWeave’s fate will reflect broader trends: the balance of power between specialized upstarts and tech giants, the sustainability of the AI arms race, and the financial viability of infrastructure-first strategies.

If CoreWeave thrives post-IPO, it could usher in a more diversified cloud market where bespoke providers coexist with hyperscalers, each driving the other to improve. AI researchers and startups would benefit from abundant, affordable compute – fueling the next wave of innovations, from more capable language models to breakthroughs in science and medicine enabled by AI. On the other hand, CoreWeave will need deft navigation to avoid pitfalls like price wars, over-expansion, or losing key clients. It stands at the intersection of opportunity and risk: the opportunity to become the go-to platform powering an AI revolution, and the risk of being squeezed by the very forces it helped unleash (increased competition and normalizing hardware supply).

For now, CoreWeave appears to be a step ahead of the pack in independent AI infrastructure. Its IPO will provide fresh capital to keep that edge and increase transparency into its operations. The $1.5 billion (or greater) raised will help write the next chapters of its story – whether that is one of continued exponential growth or a sobering adjustment to market realities. Either way, CoreWeave has already indelibly changed the narrative in cloud computing: proving that in the era of AI, even the mightiest clouds can be challenged by a focused, fast-moving contender offering what every AI practitioner wants – more GPUs, here and now.
