COBOL in Financial Systems: Past, Present, and Future

COBOL, a programming language over 60 years old, remains deeply entrenched in the world’s financial infrastructure. Banks, insurance companies, and government institutions worldwide still run critical operations on COBOL-based systems developed decades ago. This enduring reliance contrasts sharply with the modern IT landscape of cloud services and microservices. Financial IT leaders today face a paradox: how to balance the stability of proven COBOL systems with the demands of digital transformation and a looming skills shortage. This article explores the historical journey of COBOL, why it became dominant in financial services, the scale of its continued use, and the challenges of maintaining such legacy code. We will examine strategies for modernization – from refactoring code and replatforming off mainframes to full system replacements and API wrapping – along with real case studies of institutions that have attempted these transformations. We’ll also discuss the critical role COBOL still plays in mainframe environments and the risks of doing nothing. Finally, we consider the future outlook: whether financial organizations will continue maintaining COBOL, migrate to new platforms, or eventually retire these systems. The goal is to provide IT managers and financial executives a comprehensive understanding of COBOL’s past, present, and future in the industry, helping inform strategies for addressing this legacy technology challenge.

Historical Background and Evolution of COBOL

COBOL (Common Business-Oriented Language) was created in 1959 as one of the earliest high-level programming languages for business applications. It emerged from a U.S. Department of Defense initiative to develop a portable, English-like language for data processing across different computer systems. Pioneers like Grace Hopper influenced its design (COBOL’s syntax drew from her FLOW-MATIC language), making COBOL highly readable, with statements that resemble plain English. This readability was intentional: the language was meant to be used by businesses and government, so its syntax uses words like MOVE, ADD, and DISPLAY that non-programmers could almost understand.

By 1960, COBOL programs had been successfully demonstrated on multiple computer platforms, proving that code could be ported between different vendors’ machines. This portability was groundbreaking in a time when each hardware manufacturer often had its own unique language. Throughout the 1960s and 1970s, COBOL rapidly became the standard for commercial and financial software development. The language evolved through ANSI and ISO standards: COBOL-68, COBOL-74, and COBOL-85 introduced improvements such as structured programming and better file handling. The core strength of COBOL was its facility with business data processing – handling large volumes of records, transactions, and formatted data (like reports and invoices) with ease. Its fixed-point arithmetic was ideal for financial calculations, ensuring accuracy in currency computations down to the cent.
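
The point about fixed-point arithmetic can be illustrated with a minimal sketch. Python's `decimal` module is used here only as a stand-in for COBOL's decimal fields; the function name `monthly_interest` and the half-up rounding rule are assumptions made for the example, not something taken from a real banking system.

```python
from decimal import Decimal, ROUND_HALF_UP

# Binary floating point cannot represent 0.10 exactly, so repeated
# additions drift away from the true total.
float_total = 0.0
for _ in range(10):
    float_total += 0.10

# Decimal keeps exact base-10 digits, similar in spirit to a COBOL
# numeric field with an implied decimal point.
decimal_total = Decimal("0")
for _ in range(10):
    decimal_total += Decimal("0.10")


def monthly_interest(balance: Decimal, annual_rate: Decimal) -> Decimal:
    """Interest for one month, rounded to the cent (half-up rounding assumed)."""
    raw = balance * annual_rate / Decimal(12)
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(float_total == 1.0)               # False: the float total has drifted
print(decimal_total == Decimal("1.00")) # True: the decimal total is exact
print(monthly_interest(Decimal("1234.56"), Decimal("0.05")))
```

The float loop ends slightly below 1.0, while the decimal loop lands exactly on 1.00, which is the property that makes decimal fixed-point representations attractive for currency.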

By the 1980s, COBOL systems were ubiquitous in banks and insurance firms, powering everything from core banking ledgers to insurance policy management. Tens of thousands of COBOL programmers (often termed “COBOLers”) wrote and maintained these mission-critical systems. Predictions of COBOL’s demise have surfaced regularly since at least the 1970s, yet the language endured. One reason is backward compatibility: new COBOL compilers could still run programs written 20 or 30 years prior with minimal changes. This stability meant organizations saw little need to rewrite systems in newer languages if the COBOL code continued to work. Instead, they kept extending and updating their COBOL codebases. Over time COBOL incorporated some modern features (for example, object-oriented COBOL was added in the 2002 standard), but in practice most financial institutions stick to structured procedural COBOL for their needs.

Why COBOL Became Dominant in Financial Services

In the early decades of computing, COBOL achieved dominance in financial services for several key reasons. First, it was designed for business use-cases from the start, making it well-suited to banking and insurance requirements. COBOL excelled at batch processing – executing high-volume daily jobs such as interest calculations or batch updates to accounts – and it handled transaction processing reliably. Banks found that COBOL’s syntax and data structures (like its record definitions and file handling) fit the needs of common banking tasks (e.g. processing checks or account statements) far better than scientific languages of the time. Second, the support of major vendors like IBM cemented COBOL’s role. IBM’s mainframe systems in the 1960s and 1970s came with COBOL compilers, and IBM actively promoted COBOL for commercial applications. As IBM became the backbone of banking IT, COBOL rode that wave. A COBOL program written for an IBM System/360 mainframe in 1970 might still run on an IBM Z mainframe in 2025 with minimal modification – this level of continuity built enormous trust in COBOL systems’ longevity.

Another factor was standardization and training. COBOL was taught widely in universities and company training programs during the 1970s-1980s as the language for business programming. Thus a generation of programmers and IT managers grew up with COBOL as a core skill, reinforcing its use. The saying “Nobody gets fired for buying IBM” also had a parallel in software – choosing COBOL for a bank system was the safe, standard choice. Over decades, financial institutions invested hundreds of millions of dollars in developing robust COBOL systems. By the time newer languages emerged (C, C++, Java, etc.), banks had so much intellectual property and critical logic embedded in COBOL that replacing it was both technologically daunting and financially unjustifiable. COBOL had effectively created a high barrier to exit: it worked reliably, and rewriting it entailed significant risk.

Finally, COBOL’s reliability and performance played a role in its dominance. It earned a reputation for being a “workhorse” language – not elegant or cutting-edge, but dependable. COBOL programs could run for decades with only minor tweaks. In the conservative culture of finance, where errors can cost millions and outages can halt commerce, this dependability was paramount. COBOL systems achieved extremely high uptime and data integrity. This track record made executives confident in COBOL. In contrast, newer technologies often had unknown failure modes or security issues. Thus, COBOL became deeply entrenched as the backbone of financial IT systems by the end of the 20th century.

The Scale of COBOL’s Footprint in Finance Today

Far from fading away, COBOL remains widely used in 2025. The scale of COBOL code still running in production worldwide is enormous. A Reuters report in 2017 estimated there were over 220 billion lines of COBOL code in active use, and that 43% of banking systems relied on COBOL. By some measures, COBOL still powers the majority of transactions in the financial sector. For example, as of the late 2010s, 80% of in-person financial transactions (such as in branch offices) involved COBOL, and 95% of ATM swipes triggered COBOL code on the backend. These astonishing figures underscore that whenever a customer withdraws cash from an ATM or a teller updates an account, chances are a COBOL program is doing the work behind the scenes.

Even more striking, recent surveys suggest the COBOL codebase is not shrinking – it’s growing. A 2022 industry survey found over 800 billion lines of COBOL in use, more than triple the earlier estimates. This growth is partly because organizations continue to develop and extend existing COBOL systems (adding new features or regulations), and also because more legacy code was “unearthed” and counted. In many large banks, COBOL applications that started in the 1970s have been continuously expanded year after year, so the codebase accumulates like sedimentary layers. One survey of IT leaders showed nearly half of respondents expected their COBOL usage to increase over the next year – indicating that modernization efforts often involve enhancing COBOL systems rather than replacing them outright.

The financial services sector is the primary stronghold of COBOL. Mainframe COBOL systems process an estimated $3 trillion in commerce every day in the banking industry. Core banking systems (for deposits, loans, credit card processing, etc.), insurance policy administration, trade settlement systems, mortgage servicing – many of these run on COBOL code. In insurance, for instance, large insurers have COBOL-based policy management systems that handle everything from issuing new policies to billing and claims. Governments also heavily rely on COBOL: a U.S. Government Accountability Office (GAO) report in 2016 noted COBOL systems at agencies like the Department of Veterans Affairs, Department of Justice, and Social Security Administration. A 2019 GAO update added Treasury, Education, and others to the list. Many state governments use COBOL for unemployment insurance and benefits systems – in fact, as of 2020, unemployment programs in 30+ U.S. states were at least partially run on COBOL systems.

What this translates to is a vast installed base of critical software that cannot easily be switched off. COBOL applications often run on IBM mainframes (or compatible systems) which are known for handling huge transaction volumes with high reliability. For example, IBM estimates that 92 of the world’s top 100 banks still use IBM Z mainframes as their core computing platform – and these mainframes predominantly run COBOL and COBOL-derived workloads. These systems handle 90% of all credit-card transactions globally and store roughly 80% of all corporate business data. In essence, COBOL on mainframes is the unseen engine behind a large portion of global finance and commerce. It might be a 60-year-old engine, but it’s one that has been finely tuned over time.

To appreciate the reliance on COBOL, consider daily life: when you transfer money between bank accounts, pay a bill, or get paid via direct deposit, chances are a COBOL program on a bank mainframe executes that transaction and updates the records. When an insurance company processes your claim, COBOL code likely crunches the data and issues the payment. Even booking an airline ticket or receiving a Social Security check often involves COBOL somewhere in the background. The continued presence of COBOL in these processes is a testament to how deeply integrated it is in back-end infrastructure. The global economy has COBOL as one of its foundational technologies, albeit one that is invisible to consumers and even to many front-end application developers.

Challenges of Legacy COBOL Systems

While COBOL systems have served financial institutions well for decades, they present a growing array of challenges as they age. These legacy codebases and the environments they run on are increasingly seen as both a technical and business hurdle that must be addressed. Key challenges include maintainability, a shrinking talent pool, outdated documentation, integration difficulties, and regulatory pressures.

- Maintainability and Technical Debt: Many COBOL programs in use today were written 30-50 years ago and have been modified by dozens of programmers over time. The result is often a tangled, monolithic code structure with modules that have been patched and re-patched. The concept of technical debt looms large – shortcuts or quick fixes from decades past now make the code harder to change. Adding a new feature or changing a business rule in a COBOL system can be risky because a seemingly simple change might have ripple effects across a complex batch job or transaction flow. Moreover, some of these systems use archaic data stores (like flat files or hierarchical databases from the 1970s) that modern developers find cumbersome. The COBOL language itself, while robust, is verbose and not object-oriented (except in newer standards rarely used in practice), which can make implementing modern algorithms or structures awkward. All this means maintenance tasks that would be trivial in a modern language can take substantially longer in COBOL. Nonetheless, the systems must be maintained. As long as core business logic remains on COBOL, banks must invest in keeping the code correct and compliant with current needs.

- Skills Gap and Workforce Retirement: Perhaps the most publicized challenge with COBOL is the dwindling pool of experienced COBOL developers. The generation of engineers that built these systems is retiring. By 2019, industry reports were already warning that the number of COBOL-proficient programmers was “shrinking fast” due to retirements. Unlike newer programming fields, there are relatively few young developers choosing to learn COBOL. Universities today emphasize languages like Python, Java, or JavaScript; COBOL is seldom in the standard curriculum. The stereotype of COBOL as an outdated skill means new graduates have little incentive to pursue it – unless drawn by niche opportunities. The result is an aging cohort of COBOL experts and not enough juniors to replace them. Some large banks have resorted to luring retired COBOL veterans back as contractors when critical problems arise. There are even consultancy groups (with cheeky names like “COBOL Cowboys”) that specialize in providing senior COBOL programmers on demand. These experts command high hourly rates (often over $100/hour) to fix bugs or interface old code with new systems. This stop-gap measure underscores the skills gap: eventually, the supply of “COBOL cowboys” will dry up as well. One veteran noted, “some of the software I wrote for banks in the 1970s is still being used,” and if something goes wrong, very few people alive know how to fix it. IBM and others have launched training programs to try to close the skills gap – IBM claims to have trained over 180,000 new COBOL developers in the past decade – but it’s unclear if these numbers are sufficient or if those trainees remain in COBOL jobs long-term. The mismatch between demand and supply of COBOL talent is only growing over time (Figure 1).

Figure 1: COBOL Job Demand vs. Developer Supply (2010–2025). This chart illustrates the widening gap between the need for COBOL-skilled programmers and the available workforce. The demand for COBOL developers (yellow line) has gradually increased or remained robust as legacy systems still require maintenance and modernization, whereas the supply of experienced COBOL programmers (orange line) is steadily declining due to retirements and fewer entrants into the field. By 2025, the index of job demand is more than double the index of available talent (2010 baseline = 100), reflecting an acute skills shortage. Organizations are finding it increasingly difficult to fill COBOL-related roles, heightening the urgency to address legacy system risks.

- Lack of Documentation and Knowledge Transfer: A compounding problem related to maintainability and workforce is the lack of up-to-date documentation for many COBOL systems. Decades ago, it was common for critical business logic to reside only in code and the minds of its programmers, with minimal external documentation. As original developers retire, their deep knowledge of the system’s quirks retires with them. In many banks, one might hear anecdotes like “only Alice really understood the settlement batch job, but she left 5 years ago; now we just pray it keeps running.” The Reuters investigation noted that original COBOL programmers “rarely wrote handbooks”, making troubleshooting and understanding of these systems difficult for successors. Over time, some institutions have attempted to create documentation or reverse-engineer specs from the code, but this is painstaking work. Without accurate documentation, every modification to the system is risky – you might not realize the full impact of a change. It also slows down onboarding of any new COBOL hires, who must first decipher how the old code works. This knowledge gap poses a serious operational risk: if something breaks, figuring out why and how to fix it can take far longer than in a well-documented modern system. The self-describing nature of COBOL (“self-documenting” is a term sometimes used, since code reads like English) has helped a bit – experienced COBOL devs often say they can understand any COBOL program given enough time. But when thousands of programs interconnect, more than just code listings are needed to grasp the whole picture.

- Integration Hurdles with Modern Systems: In today’s digital banking environment, legacy COBOL systems do not operate in isolation – they must integrate with modern customer-facing applications, web services, mobile apps, data analytics platforms, and more. This integration is challenging. COBOL systems running on mainframes were originally designed to be siloed, processing transactions in batch or via terminal-based interfaces. Now they’re being asked to provide real-time data to internet banking platforms or to connect with fintech APIs. Wrapping a 1970s COBOL program with a modern API layer is possible (and often done), but it introduces complexity and performance considerations. The old systems may not meet modern expectations for responsiveness or flexibility. For example, a mobile banking app might want to display transaction history by querying the core banking system. If that core is COBOL-based, developers must create middleware to fetch data from the mainframe (perhaps via an intermediary database or message queue) because the COBOL system itself wasn’t built with HTTP/JSON interfaces in mind. Each such integration is a bespoke project. Furthermore, making changes to COBOL systems to support new digital channels can be slow. A bank’s COBOL system might only get updated in quarterly releases, not continuous deployment, which can frustrate fast-moving digital teams. As one report noted, banks are trying to get modern front-end tools to “work seamlessly with old underlying systems”, but it’s a significant undertaking. The push for real-time banking and open banking (where banks expose APIs to third parties) puts pressure on these legacy systems to keep up, often revealing their age.

- Regulatory and Compliance Concerns: Financial institutions operate under intense regulatory scrutiny. Regulators frequently update requirements – whether it’s new reporting standards, data retention policies, or transaction monitoring rules – and banks must update their IT systems accordingly. COBOL systems can be cumbersome to modify quickly for such changes. For instance, when a new anti-money-laundering rule mandates capturing additional transaction data, a COBOL application from the 1980s might need modifications to record that data and include it in reports. Making that change might involve altering data layouts (copybooks), database schemas, and report programs, all of which must be done carefully to avoid breaking existing functionality. The concern is that some banks might struggle to meet regulatory deadlines due to the slowness of changing legacy systems. Regulators have taken note: the GAO has highlighted that aging COBOL systems at federal agencies pose risks to timely upgrades and security patches. Additionally, outdated systems can be more vulnerable to security issues not just because of old code, but because they may not easily support modern encryption or audit capabilities without significant overhaul. Another regulatory angle is operational resilience – regulators in many countries now require banks to have robust disaster recovery and business continuity for critical systems. Ensuring a 40-year-old COBOL system meets modern resiliency standards (like geo-redundancy, rapid failover) can require wrapping it in newer infrastructure or tools. All of these compliance-related needs put strain on legacy systems. Some banks have faced regulatory pressure to modernize, implicitly or explicitly, as regulators warn of the “accumulating risk” of technology debt.

- Cost of Operation: Although it is not among the key challenges listed above, operating cost deserves mention: legacy COBOL systems, especially on mainframes, are expensive to run. Mainframe hardware and licensing (for COBOL compilers, transaction processors like CICS, database systems like DB2 or IMS) can be very expensive. Many financial institutions spend a large chunk of their IT budget just keeping these systems running (hardware maintenance, software licenses, power and cooling for data centers, etc.). This is tolerable when the system is mission-critical and there’s no alternative, but as budgets tighten, CIOs feel pressure to reduce these costs, especially if newer platforms could run the same workloads more cheaply. The cost factor often catalyzes modernization discussions: for example, could we replatform COBOL workloads to a cloud environment to save money? However, cost considerations cut both ways: replacing a COBOL system has an enormous upfront cost, so organizations often decide it’s cheaper in the short run to keep paying the “COBOL tax” of mainframe operation. It becomes a classic maintain-or-replace dilemma, where doing nothing also has a cost (which only grows as hardware ages and skills become scarce).
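
The regulatory example above (adding a field to a record layout) can be made concrete with a small sketch. It models a copybook as an ordered list of field widths; the field names, widths, and sample record are hypothetical, and real copybooks describe fields with PIC clauses rather than plain widths.

```python
from typing import Dict, List, Tuple

Layout = List[Tuple[str, int]]  # (field name, width in characters)

# Hypothetical layouts used only for illustration.
LAYOUT_V1: Layout = [("ACCOUNT-ID", 10), ("AMOUNT", 9), ("TXN-DATE", 8)]
# A new rule requires a country code, inserted before the date.
LAYOUT_V2: Layout = [
    ("ACCOUNT-ID", 10), ("AMOUNT", 9), ("COUNTRY-CODE", 2), ("TXN-DATE", 8),
]


def offsets(layout: Layout) -> Dict[str, Tuple[int, int]]:
    """Map each field to its (start, end) slice within a fixed-width record."""
    result: Dict[str, Tuple[int, int]] = {}
    position = 0
    for name, width in layout:
        result[name] = (position, position + width)
        position += width
    return result


def parse(record: str, layout: Layout) -> Dict[str, str]:
    """Slice a fixed-width record into named fields according to a layout."""
    return {name: record[start:end] for name, (start, end) in offsets(layout).items()}


def shifted_fields(old: Layout, new: Layout) -> List[str]:
    """Fields present in both layouts whose position changed."""
    old_off, new_off = offsets(old), offsets(new)
    return [n for n in old_off if n in new_off and old_off[n] != new_off[n]]


# A record written with the new layout.
record_v2 = "0001234567" + "000012550" + "DE" + "20250131"

print(shifted_fields(LAYOUT_V1, LAYOUT_V2))      # ['TXN-DATE']
print(parse(record_v2, LAYOUT_V1)["TXN-DATE"])   # DE202501 (misread by an unchanged reader)
print(parse(record_v2, LAYOUT_V2)["TXN-DATE"])   # 20250131
```

A reader still using the old layout silently misinterprets the date field, which is why every program sharing a layout typically has to be reviewed, rebuilt, and retested together when one field is added.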

In summary, COBOL systems in finance are reliable but increasingly brittle assets. They hold years of business knowledge and billions of dollars of transactions, yet each passing year makes them a bit harder to sustain. Maintenance takes longer, skilled personnel are fewer, and the business side is demanding capabilities these systems weren’t designed to deliver. This confluence of challenges has made legacy modernization a top priority for many institutions – a theme we turn to next.

Modernization Strategies for COBOL Systems

Given the critical importance of COBOL applications and the challenges outlined, financial organizations are exploring ways to modernize their legacy systems. Modernization doesn’t necessarily mean discarding COBOL entirely; in many cases it involves evolving these systems to meet current needs. There is a spectrum of strategies available, from small incremental changes to complete overhaul. The main approaches can be categorized as follows: refactoring the existing code, replatforming to new infrastructure, wrapping with APIs to extend functionality, or full replacement of the system. Each approach has its pros and cons, and often organizations will combine strategies in a phased modernization program. Below we describe each strategy and how it’s being applied in the financial industry.

1. Refactoring and Code Transformation: Refactoring involves making structured changes to the code without altering its external behavior. In the context of COBOL, refactoring might mean cleaning up and modularizing monolithic programs, or even converting COBOL code into a modern language while preserving the same logic. For example, a bank might take a 5 million-line COBOL application and systematically convert it into Java or C# using automated tools (sometimes called “transpilers” or code converters), or they might break the COBOL code into more manageable components and rewrite critical pieces manually. The goal is to improve maintainability and portability of the software. A key advantage of refactoring is that it retains the investment in existing business logic – the rules and processes accumulated over decades are carried forward, just expressed in a cleaner or more modern way. This can significantly extend the life of a system and make it easier for non-COBOL developers to work on it (if converted to a modern language). However, refactoring at this scale is non-trivial. It requires rigorous testing to ensure the refactored system behaves exactly like the old one. Given the volume of transactions and money at stake, even minor discrepancies are unacceptable. Refactoring projects often use automated test harnesses to compare outputs from the old and new code for a large number of scenarios. One benefit today is the availability of advanced code analysis tools that can map out a large COBOL codebase, identify dead code, and even suggest refactoring paths. Some organizations choose incremental refactoring – e.g., rewriting one module at a time into a modern language or a more modular COBOL program, deploying it, then moving to the next. This lowers risk versus a big bang rewrite. In practice, refactoring is a painstaking but safer way to modernize; it does not fundamentally change what the system does, only how it is implemented under the hood.

2. Replatforming (Lift-and-Shift to Modern Infrastructure): Replatforming focuses on moving COBOL applications from old hardware (like an on-premise mainframe) to modern, often less expensive platforms, without rewriting the code from scratch. This is sometimes called “lift-and-shift.” For instance, a bank might migrate its COBOL programs and data from an IBM mainframe to a distributed server environment or cloud-based emulated mainframe. Products exist to emulate mainframe instruction sets on Linux or to run COBOL workloads on cloud instances with comparable reliability. Another variant is migrating from a legacy COBOL dialect to a newer COBOL compiler that runs on open systems (for example, moving from IBM z/OS COBOL to Micro Focus COBOL on Windows or Linux). The business logic and code remain COBOL, but the underlying platform changes. The primary motivation here is often cost: running the same COBOL applications on commodity hardware or cloud can be cheaper than maintaining a mainframe data center. It can also help integrate with other modern systems if everything is on a more common platform. Replatforming often yields performance improvements as well, since modern CPUs and storage can be faster (though mainframes are highly optimized, so results vary). The challenge in replatforming is ensuring that the new environment faithfully replicates the behaviors of the old. Mainframe COBOL systems have specific ways of handling data types, transactions (e.g., CICS transactions), and batch scheduling (JCL jobs). When moving to a new platform, those need replacements or virtualization. Companies like IBM have even offered “mainframe as a service” on cloud or tools to assist with such moves. Risk is moderate here: since the code is largely unchanged, the logic remains reliable, but the runtime differences can introduce subtle bugs if not carefully managed. 
Many organizations start modernization by replatforming because it provides immediate infrastructure savings and sets the stage for further refactoring later. Essentially, they decouple the hardware dependence first, then gradually improve the software.

3. API Wrapping and Legacy Enhancement: A very common strategy in recent years is encapsulation – leaving the COBOL code running as is (often still on the mainframe) but exposing its functions through modern APIs or microservices. This approach is akin to putting a new “skin” on an old engine. For example, an insurance company might wrap their COBOL-based policy system with a set of RESTful APIs. The COBOL program remains the system of record, but now a web or mobile application can call an API which in turn triggers a COBOL transaction (perhaps via a middleware layer or message queue) and gets a response. This strategy allows organizations to rapidly achieve some modernization objectives: they can build new customer-facing portals, mobile apps, or integrate with partners, without having to change the core COBOL logic. It also helps gradually re-architect systems by adding layers that can eventually replace the core. API wrapping typically requires adding middleware such as IBM CICS web services, JSON/XML converters, or using integration platforms that connect to mainframe data. The pros are clear – minimal risk to the stable COBOL system, relatively quick implementation, and immediate improvements in interoperability. It essentially future-proofs the interface of the COBOL system. Many banks have adopted this to enable real-time services; for instance, an API might allow a fintech app to retrieve account info which under the covers runs a COBOL routine on the mainframe. The cons are that it doesn’t reduce the dependency on COBOL at all – the old system is still doing the heavy lifting. Any limitations or inefficiencies in the old code remain. In fact, layering APIs can sometimes expose performance bottlenecks: something that worked fine in batch might struggle when called frequently in real-time. Additionally, introducing an API layer means adding new components to maintain (the API gateways, converters, etc.). 
Over time, one could end up with a complex web of interconnections that itself becomes a maintenance challenge. Despite those caveats, API wrapping is highly popular because it addresses the immediate integration issue with relatively low cost and risk. It buys time to plan deeper modernization while keeping the business moving forward with new digital initiatives.

4. Full Replacement (Core System Transformation): The most ambitious strategy is to replace COBOL systems entirely – either by rewriting the software from scratch in a modern language or by implementing a commercial off-the-shelf solution to take over the functions. This is often referred to as a “core system replacement” or in drastic terms, a “rip-and-replace”. A number of financial institutions have attempted this route, sometimes successfully, sometimes with great difficulty. A full replacement means the organization identifies a modern target architecture (for example, a Java-based microservices platform, or a modern core banking package like Temenos or SAP), and then migrates all business functionality and data from the COBOL system to the new system. The advantage of a full replacement is that it frees the organization from the constraints of the old COBOL code. It can redesign processes, improve user interfaces, and generally leapfrog to current technology in one giant step. It also potentially future-proofs the organization for a longer horizon – if you replace your 40-year-old system with a brand-new one, you ideally won’t need another core overhaul for a couple of decades. However, this approach is by far the riskiest and costliest. Large-scale core replacement projects in banking often go over budget and take many years. They can be akin to performing open-heart surgery on the bank’s operations – one slip and you have a major incident. The risk of disrupting customer services or losing data is high. There have been notable failures (which we will discuss in case studies) where banks suffered outages and losses due to botched migrations. Even when successful, the costs are staggering – running into hundreds of millions of dollars for big banks. Moreover, during the multi-year transition, the bank typically has to run the old and new systems in parallel (for safety), incurring double costs. 
It’s for these reasons that some banks outright avoid full replacements unless absolutely necessary. Instead, they might opt for gradual modular replacements – for instance, replace the payments module this year, loans module next year, rather than the entire core at once. Replacing piece by piece can mitigate risk but then one has to build temporary bridges between old and new during the transition. Despite the challenges, a number of forward-looking institutions have done full replacements to achieve long-term agility and eliminate legacy constraints. Those that succeed often report significant improvements in product time-to-market and customer experience. Still, the industry’s collective lesson has been to approach this path with extreme caution.
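
The automated test harnesses mentioned under refactoring, which compare old and new outputs across many scenarios, can be sketched as follows. Both routines here are hypothetical stand-ins written in Python; in a real project the "legacy" side would be the output of the existing COBOL program, and the rounding difference is introduced deliberately to show what such a harness catches.

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP
from typing import Callable, Iterable, List, Tuple

Case = Tuple[Decimal, Decimal]  # (balance, annual rate)
Routine = Callable[[Decimal, Decimal], Decimal]


def legacy_interest(balance: Decimal, rate: Decimal) -> Decimal:
    """Stand-in for the existing routine (assumed to round half-up)."""
    return (balance * rate / 12).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def rewritten_interest(balance: Decimal, rate: Decimal) -> Decimal:
    """Stand-in for the rewritten routine, with a deliberate rounding difference."""
    return (balance * rate / 12).quantize(Decimal("0.01"), rounding=ROUND_HALF_EVEN)


def compare(old: Routine, new: Routine,
            cases: Iterable[Case]) -> List[Tuple[Case, Decimal, Decimal]]:
    """Run both routines on every case and collect any differing results."""
    mismatches = []
    for case in cases:
        expected, actual = old(*case), new(*case)
        if expected != actual:
            mismatches.append((case, expected, actual))
    return mismatches


cases = [
    (Decimal("1234.56"), Decimal("0.05")),  # 5.144, rounds the same either way
    (Decimal("100.00"), Decimal("0.06")),   # 0.50 exactly
    (Decimal("30.00"), Decimal("0.05")),    # 0.125, a tie that exposes the difference
]

mismatches = compare(legacy_interest, rewritten_interest, cases)
for case, expected, actual in mismatches:
    print(f"mismatch for {case}: legacy={expected} rewritten={actual}")
```

Only the tie case differs (0.13 versus 0.12), which shows why comparison runs need inputs that hit boundary conditions and not just typical values.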

It’s worth noting that these strategies are not mutually exclusive. In fact, many modernization programs employ a hybrid approach: wrap first to get quick wins, replatform to cheaper infrastructure, refactor select components to more modern code, and eventually replace parts that make sense to replace. The strategy chosen depends on an institution’s risk tolerance, budget, and strategic goals. For example, a bank might decide that its customer information file (CIF) system in COBOL can be wrapped and left as is (since it’s stable), but its payments engine needs a full replacement to meet real-time processing needs. Another bank might eschew replacement entirely and focus on iterative refactoring to slowly transform all its COBOL into Java without ever doing a big bang cutover.
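
As a rough illustration of the "wrap first" step, the sketch below shows the kind of translation an API layer performs: a JSON request is converted to a fixed-width message, passed to the legacy routine, and the fixed-width reply is converted back to JSON. The function `legacy_balance_inquiry`, its message format, and the status codes are all invented for this example; a real wrapper would call the mainframe through middleware rather than a local stub.

```python
import json
from typing import Callable


def legacy_balance_inquiry(request: str) -> str:
    """Stub for a legacy transaction (hypothetical format).

    Input: 10-character account ID.
    Output: status (2) + account (10) + balance in cents (12), 24 characters.
    """
    balances = {"0001234567": 1255000}  # sample data, amounts in cents
    account = request[:10]
    if account not in balances:
        return "04" + account + "0" * 12
    return "00" + account + str(balances[account]).rjust(12, "0")


def get_balance(body: str,
                call_legacy: Callable[[str], str] = legacy_balance_inquiry) -> str:
    """Translate a JSON request to the fixed-width format and back."""
    account = str(json.loads(body)["account"]).rjust(10, "0")[:10]
    reply = call_legacy(account)
    status, acct, cents = reply[:2], reply[2:12], int(reply[12:24])
    if status != "00":
        return json.dumps({"account": acct, "error": "not found"})
    # Format cents as a decimal string to avoid floating-point amounts.
    return json.dumps({"account": acct, "balance": f"{cents // 100}.{cents % 100:02d}"})


print(get_balance('{"account": "1234567"}'))
print(get_balance('{"account": "42"}'))
```

The legacy routine is untouched; all new behavior lives in the translation layer, which is both the appeal of the approach and the reason the dependency on the old code remains.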

Crucially, any modernization should begin with a thorough assessment of the existing COBOL portfolio – understanding interdependencies, complexity, and business criticality. As one modernization expert noted, jumping straight into rewriting can be “uneconomic and risky,” and it’s better to take a phased approach. Successful projects often start by decomposing the monolith into smaller parts or services (even within the COBOL environment) and then tackling each with the appropriate strategy. Modernization is not just a technology exercise but a business one: it must align with business priorities and often has to happen while the system is in active use (like changing the tires on a moving car).
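
As a rough illustration of how an assessment can feed the choice of strategy, the sketch below encodes the two examples above (a stable customer information file that is wrapped, a payments engine that is replaced) as a simple rule. The `LegacySystem` fields, the `choose_strategy` function, and the fallback to refactoring are hypothetical simplifications rather than an established method. A real assessment would weigh many more factors, such as interdependencies, complexity, and budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LegacySystem:
    """Hypothetical assessment record for one COBOL application."""
    name: str
    stable: bool                # runs reliably with few incidents
    meets_business_needs: bool  # e.g. can it support real-time processing?


def choose_strategy(system: LegacySystem) -> str:
    """Illustrative rule of thumb only; the ordering of checks is an assumption."""
    if not system.meets_business_needs:
        return "replace"   # the system cannot deliver what the business requires
    if system.stable:
        return "wrap"      # leave the code as is and expose it through APIs
    return "refactor"      # functionally adequate, but worth cleaning up


portfolio = [
    LegacySystem("customer information file", stable=True, meets_business_needs=True),
    LegacySystem("payments engine", stable=True, meets_business_needs=False),
]

for system in portfolio:
    print(f"{system.name}: {choose_strategy(system)}")
```

In practice a single system often ends up with more than one label over time, which is the hybrid pattern described above.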

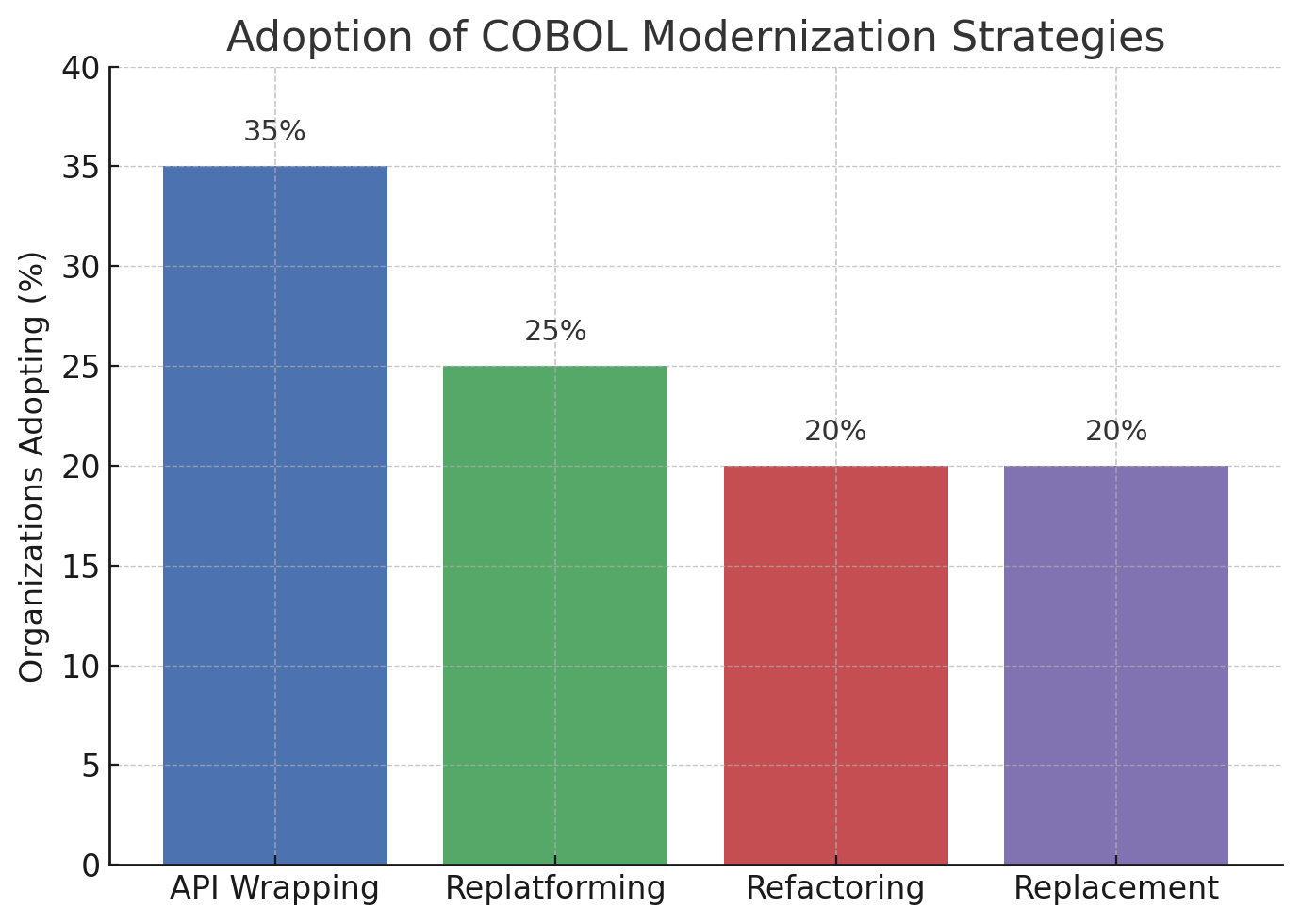

The following chart (Figure 2) illustrates how organizations are adopting these different strategies. It shows a notional breakdown of what percentage of institutions favor each approach in their COBOL modernization efforts, based on industry observations:

Figure 2: Adoption of COBOL Modernization Strategies. This chart shows the approximate adoption rates of various modernization approaches among financial institutions. API Wrapping (35%) – exposing COBOL functions via APIs – is a common first step for many, allowing quick integration of legacy systems with new digital services. Replatforming (25%) – moving COBOL workloads to modern infrastructure or cloud – is also widely pursued, driven by cost reduction goals. Refactoring (20%) – i.e., transforming or rewriting COBOL code into a modern form without changing its functionality – accounts for a significant share, often in targeted parts of the application. Full Replacement (20%) of COBOL systems with new solutions ties with refactoring as the least common approach, due to its high risk and cost, but is undertaken by some institutions as a strategic long-term fix. (These percentages are illustrative; many organizations use multiple approaches in combination.)

Case Studies: Modernizing COBOL Systems in Financial Institutions

Many banks and financial institutions have embarked on initiatives to address their COBOL legacy. Some have achieved their goals, whereas others encountered setbacks. Here we highlight a few notable case studies that illustrate the spectrum of outcomes – from successful transformations to costly failures – and the lessons learned from each.

Case Study 1: Commonwealth Bank of Australia (Core Replacement Success). One of the largest and most cited COBOL modernization projects was undertaken by Commonwealth Bank of Australia (CBA). In the mid-2000s, CBA decided to replace its aging COBOL-based core banking system with a modern software platform. This was an ambitious “big bang” replacement strategy. CBA partnered with Accenture and selected core banking software from SAP to implement. The project ultimately took about five years and cost roughly AUD 1 billion (around USD 750 million). By 2012, CBA had fully migrated to the new system, decommissioning much of its COBOL infrastructure. The outcome was successful in the sense that the bank did not suffer major outages during the transition and it achieved its modernization objectives. CBA reported improved efficiency and the ability to roll out new products faster after the new core went live. However, the cost and duration were enormous, underscoring that even a well-run core replacement demands significant resources. CBA’s project is often referenced as proof that core systems can be replaced, but only with top-level executive commitment, ample funding, and careful execution. It’s worth noting that while CBA moved off COBOL for its core banking, not every peripheral system was replaced – some surrounding systems may still run COBOL, but the heart of its retail bank operations was successfully modernized.

Case Study 2: ING Bank (Automated Code Migration to Java). ING, a major Dutch-headquartered bank with global operations, took a different approach by focusing on refactoring via automated code conversion. In the late 2010s, ING decided to migrate a significant portion of its core banking applications from COBOL on mainframe to Java running on Linux servers. Rather than rewrite everything manually, ING leveraged automated conversion tools to translate COBOL code into Java, preserving the business logic. According to a published success story, ING migrated 1.5 million lines of COBOL code (including online transaction programs using CICS and batch jobs with JCL) to Java. The bank’s mainframe was fully decommissioned, and the new Java-based system has been running in production since February 2022. The project was completed in about 18 months – a relatively short timeline given the scope – thanks in part to automation and focusing on an as-close-to-like-for-like conversion as possible. ING placed heavy emphasis on testing: over 2 billion transactions were cross-checked between the old COBOL system and the new Java system to ensure identical results. This exhaustive testing phase was critical to gain confidence that customers and internal processes would see no difference except the technology under the hood. ING’s case demonstrates that a well-planned refactoring (with modern tools) can work for large-scale systems. The benefit for ING is they now have core banking software in a modern programming ecosystem (Java) which presumably is easier to maintain and integrate. However, it’s important to note that automated conversion often produces code that, while functional, may not be as optimized or idiomatic as if it were designed in Java from scratch. ING likely will continue to refine and modernize that codebase over time. Still, this approach avoided the riskiest parts of a full rewrite by leveraging the proven logic of the existing system and simply changing its form. 
ING’s successful migration offers hope to others that not all legacy modernization need be “all or nothing” – you can carry forward what works into a new platform if you do it carefully.
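
The cross-checking described above can be pictured as a parallel-run comparison: the same inputs are fed to the old and new implementations, and any differing result is flagged. The sketch below is a minimal illustration in Python, not ING's actual test harness. `legacy_post`, `migrated_post`, and `naive_float_post` are hypothetical stand-ins for the COBOL routine, its converted counterpart, and a faulty port.

```python
from decimal import Decimal
from typing import Callable, List, Tuple

Case = Tuple[Decimal, Decimal]  # (opening balance, transaction amount)
PostFn = Callable[[Decimal, Decimal], Decimal]
CENT = Decimal("0.01")


def legacy_post(balance: Decimal, amount: Decimal) -> Decimal:
    """Stand-in for the COBOL routine: fixed-point arithmetic, two decimals."""
    return (balance + amount).quantize(CENT)


def migrated_post(balance: Decimal, amount: Decimal) -> Decimal:
    """Stand-in for the converted routine; it should behave identically."""
    return (balance + amount).quantize(CENT)


def naive_float_post(balance: Decimal, amount: Decimal) -> Decimal:
    """A deliberately faulty port that uses binary floating point."""
    return Decimal(str(float(balance) + float(amount)))


def cross_check(cases: List[Case], old: PostFn, new: PostFn) -> List[Tuple[int, Decimal, Decimal]]:
    """Run every case through both implementations and collect mismatches."""
    mismatches = []
    for index, (balance, amount) in enumerate(cases):
        expected = old(balance, amount)
        actual = new(balance, amount)
        if expected != actual:
            mismatches.append((index, expected, actual))
    return mismatches


cases = [
    (Decimal("0.10"), Decimal("0.20")),
    (Decimal("1.00"), Decimal("2.00")),
]

print(cross_check(cases, legacy_post, migrated_post))     # faithful port
print(cross_check(cases, legacy_post, naive_float_post))  # faulty port
```

The faithful port should produce no mismatches, while the floating-point port should be flagged on the first case, because 0.10 + 0.20 is not exactly 0.30 in binary floating point. Differences of this kind are easy to miss in code review and are one reason like-for-like migrations lean so heavily on replaying real transaction volumes.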

Case Study 3: TSB Bank (UK) – Failed Core Migration as a Cautionary Tale. Not all modernization attempts have happy endings. TSB Bank in the United Kingdom provides a sobering example of how things can go wrong. In 2018, TSB (which had been spun off from Lloyds Bank a few years prior) attempted a major IT migration that involved moving off a COBOL-based banking platform inherited from Lloyds to a new system provided by its new parent, Banco Sabadell. This was effectively a core replacement scenario under tight time pressure due to the ownership change. The migration was notoriously problematic: when TSB switched over to the new system in April 2018, customers immediately experienced massive outages and errors. Online and mobile banking were down for days, some customers saw incorrect balances or even accounts that weren’t theirs, and the chaos led to a public relations disaster. It was later revealed that migrating a billion customer records and multiple systems at once was too much, too fast. TSB’s services were disrupted for up to two weeks for many users. The failure not only eroded customer trust but also had significant financial consequences. TSB had to set aside tens of millions for customer redress and tech fixes; reports indicated the bank incurred around £330 million in direct costs from the botched migration, on top of the planned project budget and additional fraud losses during the chaos. The CEO of TSB stepped down amid the fallout. This case study is often cited in boardrooms as a reminder of the risk inherent in core replacements, especially when done in a “big bang” cutover. The lessons include the importance of realistic timelines, perhaps a more phased migration approach, more rigorous testing, and not underestimating data conversion challenges. TSB’s issue wasn’t that COBOL couldn’t be left behind – it was that the execution of doing so was flawed. 
For our discussion, it emphasizes why many banks are extremely cautious about full replacements and often prefer incremental approaches or heavy rehearsal of cutovers. In regulatory hearings afterward, it was noted that the legacy COBOL system (from Lloyds) was actually stable; the failure occurred in the new replacement. Thus, ironically, the old system was reliable up until they tried to replace it, which underscores the old “if it ain’t broke, don’t fix it” wisdom that often keeps COBOL in place.

Case Study 4: State Government Unemployment Systems (COBOL Crisis of 2020). This example is from the public sector rather than a private financial institution, but it is instructive. During the COVID-19 pandemic in 2020, unemployment insurance systems in many U.S. states were overwhelmed by a sudden surge in claims. States like New Jersey, Kansas, and Connecticut ran these benefit systems on COBOL mainframes that were decades old. The unprecedented volume of claims, plus the need to rapidly implement new federal relief programs (stimulus payments, additional unemployment supplements), put these systems under strain. Headlines were made when governors and state labor departments issued public pleas for COBOL programmers to come out of retirement to help update code for the new programs. The situation exposed how critical and yet fragile these legacy systems were. Here, modernization had been delayed or neglected for years, and the “inaction” led to scrambling in a crisis. Some states had modernization projects in progress to replace or update these COBOL systems, but those were not ready in time and had to be put on hold during the emergency. The result was that changes which would normally take months had to be done in days by a small pool of experts, and performance issues had to be mitigated by adding hardware or rationing system usage. This case demonstrates both the resilience and risk of COBOL: the systems ultimately did get the job done (benefits were paid out, albeit with delays), showing COBOL can handle massive workloads. But the difficulty of quickly modifying them to handle new rules (like the CARES Act provisions) highlighted the inflexibility problem. After 2020, it is reported that many states accelerated plans to modernize these government systems, using a mix of new off-the-shelf software or cloud-based solutions. The key takeaway is that waiting until a crisis (a pandemic, a major law change, etc.) to address COBOL issues is dangerous – modernization or at least thorough contingency planning should happen in a controlled manner, not under emergency conditions.

Case Study 5: Nordea Bank (In-Progress Core Modernization). Nordea, one of the largest banks in the Nordics, embarked on a major core banking transformation in the mid-2010s. Partnering with Accenture and core banking software vendor Temenos, Nordea aimed to replace its multiple legacy systems (including COBOL-based platforms) with a single modern core banking system (Temenos T24). The project was slated to be a multi-year effort completing around 2020. Nordea’s approach was phased – migrating country by country and product by product to the new platform. By 2019, Nordea had started to cut over segments (for example, new savings accounts in one country were on the new system). However, the program proved very challenging; Nordea had to take significant write-downs on the project costs, and progress was slower than anticipated. Ultimately, in 2021 Nordea announced a scale-back of the full replacement vision, opting instead for a more gradual evolution and integration of old and new systems. This case underscores the complexity of core banking projects: even with experienced partners and modern tech, a bank must manage change across multiple jurisdictions and products. It illustrates a middle path where a bank may need to adjust its strategy from an aggressive replacement to a more incremental rollout as realities set in. Nordea’s effort is still notable as one of the largest in Europe, and parts of its operations are now on modern software, but it also retained some legacy components longer than initially planned. For other banks, Nordea’s candid acknowledgment of hurdles provides caution to plan for flexibility and not assume one can flip a switch on an entirely new core easily.

These case studies reveal a few common themes. One is that thorough testing and phased deployment are crucial – both ING and CBA emphasized very extensive testing or gradual go-lives, which helped them succeed, whereas TSB’s troubles were partly due to an insufficiently tested big bang. Another theme is executive support and funding: these projects require large investments and often multi-year commitment where ROI may not be immediate. Institutions that view core modernization as a strategic necessity (even if expensive) tend to allocate the needed resources and tolerate the lengthy timeline, whereas those looking for quick fixes often stumble. The cases also show that there is no one-size-fits-all approach: each organization chose a path that fit its context (CBA a full package replacement, ING an automated refactor, TSB a forced migration with unfortunate execution, Nordea a commercial software rollout, government systems largely doing piecemeal fixes and wraps). A lesson is that incremental approaches generally carry less risk, but even those can be slow and costly; bold approaches can pay off but need the right circumstances and talent.

Future Outlook: Maintain, Migrate, or Retire COBOL?

Looking ahead, what does the future hold for COBOL in financial systems? Will we still be talking about COBOL in banking a decade from now, or will it have finally been supplanted by modern technologies? The answer, most likely, is that COBOL will continue to have a presence, but a diminishing one, as institutions straddle the line between maintenance and migration. Different organizations will choose different paths, but industry trends point to a few likely scenarios for the coming years:

1. Continued Maintenance and Life Extension: A number of institutions will choose to double down on maintaining COBOL systems, at least for the next 5-10 years. For these organizations, the strategy is to manage the risk rather than eliminate it in the short term. This means investing in measures to make COBOL maintenance easier and to address the talent gap. We are already seeing efforts like specialized COBOL training programs for new graduates, often sponsored by large banks or government agencies. Some universities and coding bootcamps have introduced COBOL courses after the 2020 publicity around it, aiming to produce a pipeline of COBOL-capable programmers. Companies are also improving tooling: modern IDEs (Integrated Development Environments) for COBOL, such as Eclipse-based editors with COBOL plugins, are being adopted to provide a more modern development experience (with features like code autocomplete, debugging, etc.). By improving tools and training, organizations can mitigate the skills shortage and potentially make COBOL development more efficient. IBM’s initiative to train 180,000+ COBOL developers in recent years is an example of proactively extending the life of COBOL by ensuring humans are available to work with it. Also, vendors like Micro Focus (now OpenText) regularly update their COBOL compilers to support new platforms and integrations, meaning the language is being quietly modernized in capability even if the syntax remains old-fashioned. We can expect COBOL to continue as a strategic asset in many organizations’ IT strategies – indeed, 92% of enterprises in a 2022 survey said their COBOL applications are strategic to their future. As long as that sentiment holds and COBOL systems are meeting business needs, companies will maintain them.
Maintenance does not mean stagnation: we will likely see COBOL systems wrapped in more APIs, augmented by AI (some are experimenting with AI tools to parse and document COBOL code automatically), and running on slightly newer infrastructure (some mainframe workloads might move to mainframe-as-a-service models, etc.). In this maintenance-oriented outlook, COBOL might gradually become more of a “back-end engine” that nobody touches frequently, while all new logic is built around it. Essentially, treat it as a black box that you protect and feed, until maybe at some point it truly becomes obsolete.

2. Incremental Migration and Hybrid Architectures: Many organizations will pursue a hybrid approach – neither keeping COBOL everywhere nor eliminating it entirely, but gradually migrating components to modern systems in a piecemeal fashion. This incremental migration means over the next decade, pieces of functionality now in COBOL will be rewritten or moved, one by one. For instance, a bank might decide that its customer-facing channels need real-time data, so it builds a new customer data store (maybe using a modern database) that is kept in sync with the COBOL system, then eventually flips the master record to the new store. Or it might carve out one business line (say, personal loans) and implement a new module for that, while leaving other products on COBOL for the time being. The end state envisioned in such cases is a mixed environment where some core services might still be COBOL-based but others are microservices or cloud applications, all interoperating. This is already happening in many large banks: e.g., new digital banking platforms are layered on top of old cores, gradually absorbing functionality. The presence of robust API gateways and event streaming platforms (like Kafka) makes it easier to keep legacy and new systems in sync in real-time, facilitating a phased migration. Over time, as more modules move off COBOL, the dependency shrinks. Perhaps in 10-15 years, a bank that started with 100% COBOL core might be down to 20% of its transactions still involving COBOL, with the rest on new systems. This slow drain of COBOL usage could make the final cutover (for that remaining 20%) much easier and less risky, as it might not be business-critical by then. The challenge here is sustaining two worlds in parallel for an extended period, which requires good architecture governance and can be costlier in the medium term (due to duplication). But it smooths out the risk and investment over time. 
A likely trend is that non-critical and new workloads will almost exclusively go to new platforms, so COBOL’s footprint naturally reduces to only the most critical legacy pieces which will be last to move. We also foresee that more automated migration tools (potentially enhanced by AI) will come to market, making code conversion and data migration more reliable – this could accelerate incremental migrations as confidence in automation grows (similar to how ING leveraged a conversion tool).
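
The piecemeal migration described above is often implemented with a routing layer that decides, per request, whether the legacy core or the new platform should respond. The following is a minimal sketch of that idea under simplifying assumptions. `MigrationRouter`, `legacy_core`, and `new_platform` are hypothetical names, and a real deployment would sit behind an API gateway and also have to keep data in sync between the two systems.

```python
from typing import Callable, Dict, Iterable, Set

Handler = Callable[[Dict[str, str]], str]


def legacy_core(request: Dict[str, str]) -> str:
    """Stand-in for a call into the COBOL core, e.g. through an API wrapper."""
    return f"legacy:{request['product']}"


def new_platform(request: Dict[str, str]) -> str:
    """Stand-in for a call to the replacement service."""
    return f"new:{request['product']}"


class MigrationRouter:
    """Sends each request to the new platform only if its product has moved."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self._legacy = legacy
        self._modern = modern
        self._migrated: Set[str] = set()

    def migrate(self, product: str) -> None:
        self._migrated.add(product)

    def roll_back(self, product: str) -> None:
        self._migrated.discard(product)

    def handle(self, request: Dict[str, str]) -> str:
        target = self._modern if request["product"] in self._migrated else self._legacy
        return target(request)

    def legacy_share(self, requests: Iterable[Dict[str, str]]) -> float:
        """Fraction of the given requests that would still reach the legacy core."""
        batch = list(requests)
        if not batch:
            return 0.0
        on_legacy = sum(1 for r in batch if r["product"] not in self._migrated)
        return on_legacy / len(batch)


router = MigrationRouter(legacy_core, new_platform)
router.migrate("personal_loans")  # carve out one business line first

print(router.handle({"product": "personal_loans"}))
print(router.handle({"product": "mortgages"}))
```

Because the switch is made per product, a problematic cutover can be reversed with `roll_back` without touching the other lines of business. A measure like `legacy_share` gives a simple way to track how much traffic still depends on COBOL as the migration proceeds.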

3. Eventual Replacement and Retirement: For some institutions, especially those where modernization has been ongoing, we will see full retirement of COBOL systems in the not-too-distant future. For example, a few large banks have publicly stated aggressive targets like “eliminate mainframe COBOL by 2030”. Whether those targets hold is debatable, but it signals a desire that within a decade or so, they want to be free of that dependency. As new core banking products (many cloud-native) mature and prove themselves, more banks might take the plunge to adopt them wholesale. It might also happen through generational change: as older executives and IT leaders (who were perhaps more comfortable with COBOL) retire, younger leadership may be more willing to take bold action to replace legacy technology. Additionally, external factors can force retirement – for instance, mergers and acquisitions often lead to system consolidations. If a bank running COBOL is acquired by one running a modern system, the combined entity may choose one over the other. If regulators or governments increase pressure (for example, if they start to mandate technology updates for critical financial market infrastructure), that could also spur action. Ultimately, over a long enough timeline, one expects COBOL usage to recede simply because the people who know it and the hardware that runs it cannot last forever. But “long enough timeline” here could still mean decades. One revealing data point: in the insurance industry, which also heavily used COBOL, there are legacy life insurance policies written in the 1970s that will still pay out benefits in the 2030s and 2040s – the systems that track those might need to remain until the last policy is settled. Similarly, in banking, certain loan systems might need to persist for the life of those loans (30-year mortgages etc.). So some COBOL systems will retire naturally as the business they support winds down. 
Government agencies have some systems so old (60+ years) that they plan to retire them as soon as replacements are up – for example, the U.S. IRS has been inching towards retiring its 1960s COBOL master file for taxpayer data, with plans (and funding) to finally do so in coming years after numerous delays. The future state in, say, 2040 could be that COBOL is a niche skill used only to maintain historical archives or very niche legacy pockets, much like how today there are still a few people maintaining some assembly language or 1960s code in corners.

However, it’s important to temper expectations: experts have been predicting “the end of COBOL” for decades and have been proven wrong time and again. As far back as 1960, people doubted COBOL’s longevity, yet here we are. Every new tech wave (client-server in the 90s, internet in the 2000s, cloud in the 2010s) prompted folks to say COBOL would finally be replaced, but in each case, integration was chosen over replacement for most core systems. So, in the mid-2020s, while cloud-native banking platforms and AI automation are the latest forces that could reduce COBOL dependency, the pragmatism of financial IT suggests that many COBOL applications will still be around for at least another decade, if not longer, albeit gradually diminishing. In fact, some surveys even show increases in COBOL code volume, as mentioned, which implies institutions are sometimes choosing to extend and invest in COBOL rather than replace it.

The future will likely also include more COBOL interoperability: for example, COBOL running serverless in the cloud (there have been experiments to allow COBOL programs to execute in AWS Lambda or similar services), or using APIs so seamlessly that whether a service is backed by COBOL or not becomes irrelevant to the consumer. If the surrounding ecosystem compensates enough (through caching, APIs, etc.), the pressure to remove COBOL lessens. Additionally, as mainframe vendors adapt pricing (IBM has been making moves to make mainframe costs more consumption-based, to compete with cloud economics), the cost argument might soften, allowing organizations to keep COBOL systems without as much financial penalty.

In conclusion, the outlook for COBOL is a continued slow evolution. We will see a steady migration away in many places, significant efforts to modernize and interface in others, and a small but persistent core that may never fully go away until those organizations themselves sunset. Financial institutions will have to regularly revisit their COBOL strategy, possibly dividing systems into categories: those to maintain, those to migrate, those to replace. The smart strategy is one that is adaptive – recognizing that new tools and solutions are emerging (for example, maybe in a few years AI-driven code translation could dramatically reduce the cost of refactoring, tipping the balance in favor of migration). It’s a space to watch: while COBOL might not be front-page news often, whenever there’s a major tech failing at a bank, COBOL tends to get mentioned, and whenever there’s a major tech success at modernizing a bank, COBOL is in the backdrop of “what we moved away from.”

For IT managers and financial executives, the key is to treat COBOL not as an obsolete relic, but as a legacy asset that must be proactively managed. Those who plan effectively will ensure that their institution can harness the strengths of COBOL (stability, proven logic) while overcoming its weaknesses (inflexibility, scarce skills) through modernization. In doing so, they will gradually position their organizations for a post-COBOL world on their own terms, rather than being forced into it by crisis. And if done right, the transition can be so smooth that the outside world never even notices – customers will simply enjoy continuous, improved services, unaware that the bank quietly changed the engine that has been running their accounts for decades.

Conclusion

COBOL’s story in financial systems is one of remarkable endurance. From its origins in 1959 to its central role in 20th-century banking automation, and even now as a backbone for many critical applications, COBOL has proven to be anything but “dead.” Financial services became the stronghold of COBOL due to the language’s alignment with business needs and the huge investments made in COBOL software during the industry’s formative tech years. Today, that historical legacy is a double-edged sword – providing reliable service but posing modernization dilemmas.

The current state of COBOL in finance is substantial: hundreds of billions of lines of code executing billions of transactions daily, securing trillions of dollars in value. Yet, cracks in the foundation are evident through the challenges of maintaining these aging systems. The shortage of COBOL skills, the archaic nature of some codebases, and integration woes have placed COBOL at a crossroads. Financial institutions can no longer ignore the issue; many are actively strategizing how to cope with or eliminate their COBOL dependency.

We explored several strategies – from gentle refactoring to daring replacements – each with successes and cautionary tales to learn from. The experience of Commonwealth Bank of Australia shows modernization is possible with enough resolve and resources. ING’s journey demonstrates the power of automated refactoring coupled with rigorous testing. The tribulations of TSB highlight the perils of poor execution in migration. These case studies collectively suggest that there is no trivial path, but a well-planned, phased approach stands the best chance of success. Indeed, a recurring lesson is: plan meticulously, test extensively, involve knowledgeable people, and be realistic about timelines.

COBOL’s partnership with mainframe technology means that any decision around COBOL often implicates an institution’s entire IT architecture and operational model. Mainframes continue to prove their worth in certain arenas, and as long as they remain, COBOL likely will too. However, economics and technology trends (like cloud computing) are providing viable alternatives that didn’t exist a decade ago, making migrations more appealing now than before.

The risks of maintaining the status quo – from talent drain to system failure – act as a strong incentive for action. Regulators and competitive pressures will only intensify, nudging organizations to address legacy tech debt one way or another. The future will probably see a reduction in COBOL use, but it will be a measured decline. COBOL is not going to disappear overnight; instead, its footprint will shrink year by year as modernized components take over functions. In 2030, we will likely still see COBOL in the largest banks, but perhaps playing a smaller role, interfaced seamlessly with modern systems. By 2040, one can envision COBOL being truly niche, but given its history, one hesitates to make a definitive prediction – after all, similar predictions were made in 2000, yet here we are in 2025 with COBOL still critical.

For IT managers and executives reading this, the key takeaway is the importance of having a COBOL game plan. Whether your strategy is to maintain (but reinforce) or to migrate (gradually or via a big project), it must be deliberate. Assess your risk, know your COBOL inventory, and understand your options. Allocate budget for preliminary steps like creating documentation, tests, and interface layers – these will pay off whichever path you choose. Also, consider the human element: invest in training a new generation (or partnering with firms that have COBOL talent) now, so you’re not caught flat-footed in a few years.

COBOL in financial systems has a future – not as a growth technology, but as a legacy that will be with us until it’s systematically dealt with. The journey to move off COBOL is a marathon, not a sprint, and some institutions are just at the starting blocks while others are halfway through. By learning from past experiences and leveraging modern tools and strategies, organizations can ensure that this transition, however long it takes, is smooth and secure. And for those that choose to keep COBOL around, it will need care and feeding like any other critical asset.

In conclusion, COBOL’s dominance in financial services was well-earned through historical circumstance and technical strengths. Its continued prevalence is a reality that must be managed, not ignored. With prudent planning, firms can mitigate legacy risks and progressively modernize their IT landscape. COBOL might be a 60-year-old language, but as the saying goes, “legacy code is code that works.” The mission for today’s technology leaders is to ensure that this working code does not hinder future innovation – either by transforming it or by transitioning away from it in a controlled manner. The coming decade will be crucial in determining how the next chapter of COBOL’s story in finance unfolds – whether it will finally ride off into a well-deserved sunset, or remain, quietly running in the background, as the reliable workhorse it has always been.

References

- IBM COBOL Solutions
  https://www.ibm.com/products/cobol-compiler
- Micro Focus COBOL (now part of OpenText)
  https://www.microfocus.com/en-us/products/cobol/overview
- COBOL Still Powers the Global Economy
  https://www.reuters.com/investigates/special-report/usa-states-cobol
- COBOL: The Code That’s Running Your Life
  https://www.theverge.com/21287881/cobol-programming-language-mainframes-banks
- Why COBOL Is Still Critical
  https://www.techrepublic.com/article/why-cobol-is-still-critical-to-modern-enterprise-computing
- Commonwealth Bank’s Core Banking Transformation
  https://www.commbank.com.au/articles/newsroom/2020/10/cor
- States Scramble to Find COBOL Programmers
  https://www.cnbc.com/2020/04/09/coronavirus-unemployment-states-turn-to-cobol.html
- IBM Z Mainframe Overview
  https://www.ibm.com/it-infrastructure/z
- Core Banking Modernization Insights
  https://www.accenture.com/us-en/insights/banking/core-modernization
- Inside ING’s COBOL to Java Migration
  https://www.computerworld.com/article/3670198/ing-completes-cobol-to-java-migration.html
- TSB IT Failure Case Study
  https://www.bbc.com/news/technology-43864710
- Modernizing Legacy Systems
  https://www2.deloitte.com/us/en/pages/consulting/articles/legacy-modernization.html
- GAO Report on Federal IT Systems
  https://www.gao.gov/products/gao-19-471
- Why COBOL Won’t Die
  https://news.ycombinator.com/item?id=25371686
- Migrating Off Mainframes
  https://www.oreilly.com/library/view/migrating-off-the/9781492077497